A/B testing is like cooking for friends: you never know which dish they’ll love until you try it. For digital marketers, it’s about discovering what works methodically rather than speculating. Think of it as a tool that shows how small adjustments, such as changing a headline or a button, can significantly improve your results.

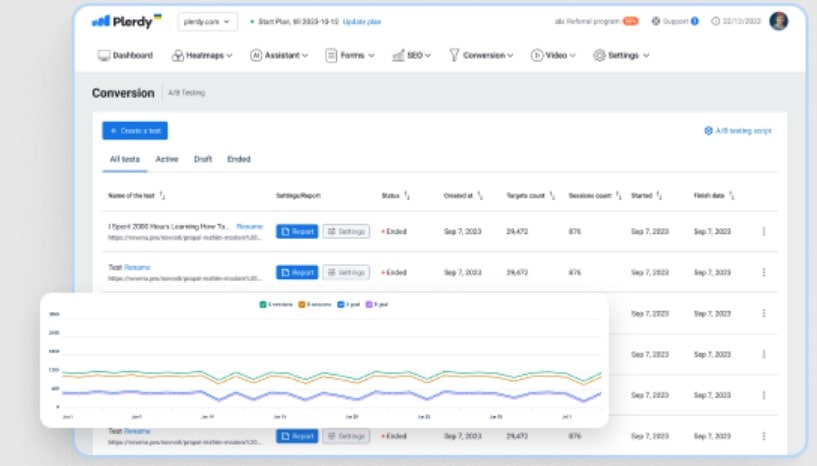

Tools such as Google Optimize or VWO let you check what clicks with your audience. And let’s face it: without testing, you’re throwing darts in the dark. A/B testing lets you know instead of assume. Ready to turn “meh” campaigns into winners? Let’s get started!

Define Clear Goals and Hypotheses

How to Set Specific Goals

So you’re ready to run an A/B test. But wait: what is your goal? Without a specific objective, you’re just experimenting to see what happens, and that wastes time and effort. When the aim of your test is clear, you can tell whether it’s working.

Start with realistic, quantifiable targets. Want more sign-ups? Aim for a 10% increase in your landing page conversion rate. Struggling with email clicks? Aim to raise your click-through rate from 3% to 5%. Numbers guide your test; they’re the scoreboard of your marketing game.
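
Targets like these are easy to misread, because a “10% increase” can mean ten percentage points or ten percent of the current rate. The Python sketch below shows the difference using the 3% to 5% click-through goal above; the 4% baseline sign-up rate is a made-up number for illustration, and the helper names are hypothetical.

```python
def absolute_lift(baseline: float, target: float) -> float:
    """Change in percentage points (rates given as fractions, e.g. 0.03 = 3%)."""
    return target - baseline


def relative_lift(baseline: float, target: float) -> float:
    """Change expressed as a fraction of the baseline rate."""
    if baseline <= 0:
        raise ValueError("baseline rate must be positive")
    return (target - baseline) / baseline


def target_from_relative(baseline: float, lift: float) -> float:
    """Rate you need to reach for a given relative lift."""
    return baseline * (1 + lift)


# Click-through goal from the text: 3% -> 5%.
ctr_points = absolute_lift(0.03, 0.05)    # 2 percentage points
ctr_relative = relative_lift(0.03, 0.05)  # about 67% relative lift

# A "10% increase" on a hypothetical 4% landing-page conversion rate.
signup_goal = target_from_relative(0.04, 0.10)  # 4.4%, not 14%

print(f"{ctr_points:.1%} points, {ctr_relative:.0%} relative, goal {signup_goal:.1%}")
```

Writing the goal down in both forms before the test starts avoids arguments later about whether you hit it.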

What should you track in an A/B test? Here’s a quick list:

- Conversion rates: the share of users who take the desired action, such as buying.

- Bounce rates: the share of users who leave your page far too quickly (yikes).

- Click-through rates: the magnetic pull of your CTA.

Tools such as Plerdy and Crazy Egg make it simple to monitor these key metrics. Setting one goal per test keeps it clear what needs to change. That makes your A/B testing strategic rather than throwing spaghetti at the wall.

Crafting Hypotheses That Drive Results

Every A/B test begins with a hypothesis, a fancy term for an educated guess. It isn’t a random idea, though; it needs structure. Ask yourself: what change could bring about an improvement?

Your hypothesis should follow the SMART approach: specific, measurable, attainable, relevant, and time-bound. For instance, say “We think changing the button color from red to green will increase sign-ups by 15% in three weeks” instead of “Changing the button color might help.”

Review your problem areas to form a reasonable hypothesis. Weak CTA? Test different copy or button sizes. Users bouncing quickly? Work on the page layout. Each hypothesis should name one clear problem and propose a fix.

And don’t forget to document it. Clear records make A/B testing far more effective. Keep each hypothesis simple and targeted, for example: “Testing a new email subject line will improve open rates by 10% in two weeks.”
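
If you keep those records in code or export them from a spreadsheet, a small structured record makes every hypothesis carry the same SMART fields. This is a minimal Python sketch; the `Hypothesis` class and its field names are hypothetical, not part of any testing tool.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List


@dataclass
class Hypothesis:
    change: str           # the single variable being changed (specific)
    metric: str           # the KPI that decides the test (measurable)
    expected_lift: float  # relative lift, e.g. 0.10 for +10%
    start: date
    duration_days: int    # fixed before launch (time-bound)
    notes: List[str] = field(default_factory=list)

    @property
    def end(self) -> date:
        return self.start + timedelta(days=self.duration_days)

    def statement(self) -> str:
        return (f"Changing {self.change} will improve {self.metric} "
                f"by {self.expected_lift:.0%} by {self.end.isoformat()}.")


h = Hypothesis("the email subject line", "open rate", 0.10, date(2024, 3, 4), 14)
print(h.statement())
```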

Strategies for Prioritizing High-Impact Goals

- Find where your metrics are weak: low conversions, high bounce rates, or few clicks.

- Rank areas by potential impact, such as CTAs or landing pages.

- Start with modest, realistic goals. A small win beats chasing large, unachievable ones.

Well-defined goals and targeted A/B experiments lead to measurable success.

Choose the Right Variables to Test

Isolating Key Variables

A/B testing works best when you keep it simple. Change one thing at a time, just one. Why? If you test too many variables, you won’t know which change made the difference. It’s like swapping every ingredient in a recipe at once: did the dish fail because of the odd spice or the salt? No one knows.

Start with elements that have significant impact. Test your button placement: move it above the fold or near the headline. Headlines? Try punchier wording or add numbers. Small changes, such as swapping a product image, can produce surprisingly large effects.

Remember, this isn’t about speculation. Choose your variable based on data. Plerdy and similar tools can show where users drop off or click most; that’s where you start testing. Small, deliberate changes add up to big gains. Keep your A/B test focused, and the results will show you the way.

Avoiding Common Pitfalls in Variable Selection

Here’s the deal: don’t test everything at once. If you change both the headline and the button color in the same A/B test, how will you know which one worked? You won’t. That’s the biggest mistake beginners make.

Also avoid testing trivial elements. Changing the font size in the footer? A waste of time. Focus on high-impact elements such as images or CTAs. One test, one variable, every time.

Examples of Variables to Prioritize

- Call to action (CTA): text, color, or placement.

- Headline: keyword, length, or tone.

- Product images: different angles, colors, or designs.

- Forms: number of fields, layout, or button text.

When you’re clear on what to test, your A/B results will actually mean something. Keep things simple and orderly, and let the data speak for itself.

Set Up Statistically Valid Tests

Calculating Sample Size and Test Duration

Starting an A/B test without enough data? That’s like running a race blindfolded: exciting, but you’ll hit a wall. Before you start, calculate the appropriate sample size and decide on the test duration. This makes your findings dependable rather than lucky guesses.

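Use Plerdy, Optimizely, or VWO to handle these calculations. These tools take your site traffic, your current conversion rate, and the minimum detectable effect (the smallest change in your target metric you care about detecting) and tell you how many visitors you need. That number depends mostly on your baseline conversion rate and the effect size; traffic only decides how long it takes to collect them. For example, a site with 1,000 daily visits split evenly sends about 500 visitors to each variation per day, which may be enough within days for a large effect but take weeks for a small one. The arithmetic isn’t hard, but it is essential.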
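
Under the hood, these calculators typically run a power calculation. The Python sketch below uses the standard normal-approximation formula for comparing two conversion rates with a two-sided test; the 5% significance level and 80% power defaults are common conventions rather than requirements, and the function name is hypothetical. Your tool’s numbers may differ slightly depending on the exact method it uses.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, mde_relative: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant to detect a relative lift of mde_relative.

    Normal approximation for a two-sided test of two proportions.
    """
    p1 = baseline
    p2 = baseline * (1 + mde_relative)
    if not 0 < p1 < 1 or not 0 < p2 < 1:
        raise ValueError("rates must be between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# 5% baseline conversion rate, smallest lift worth detecting: +20% (to 6%).
n = sample_size_per_variant(0.05, 0.20)
print(n)  # roughly 8,000 visitors per variant
```

Notice how quickly the number grows as the lift you want to detect shrinks: halving the minimum detectable effect roughly quadruples the required sample.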

So how long should your A/B test run? At least two weeks. User behavior shifts over the week; Monday shoppers don’t act like Saturday ones. Running your test through full weekly cycles captures these patterns. And even if the results look clear-cut, don’t stop early. A/B testing calls for patience above all; stopping early means acting on incomplete information.

Ensuring Test Consistency

Consistency is the first rule of A/B testing. Your versions should differ only in the variable you’re testing. Testing a headline? Great; leave the rest of the page unchanged. Changing the button color? Don’t change the font as well. Mixed changes produce muddled results.

And keep performance in mind. If Version B loads more slowly than Version A, you’re testing user patience rather than your variable. Broken links or faulty features? That’s a fast way to ruin your test. Double-check everything before you hit “Go.”

Checklist for a Technically Sound Setup

- Identical designs: apart from the test variable, A and B should look the same.

- Even traffic split: tools like Google Optimize divide traffic 50/50 between the versions.

- Accurate tracking: record everything with Google Analytics or Plerdy so the counts are correct.

- Mobile-friendly: your A/B test needs to run smoothly on all devices.

- Sufficient sample: at least 500 visitors per version, and more if your sample-size calculation calls for it.
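
Testing tools handle the 50/50 split for you, but it helps to know how it commonly works: each visitor is hashed into a bucket, so they see the same version on every return visit. The Python sketch below illustrates that idea; the function name, the experiment key, and the choice of SHA-256 are assumptions for illustration, not how any particular tool implements it.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, share_a: float = 0.5) -> str:
    """Deterministically assign a user to "A" or "B".

    Hashing experiment + user ID means the same user always gets the same
    version, while different experiments split users roughly independently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return "A" if bucket < share_a else "B"


counts = Counter(assign_variant(f"user-{i}", "cta-color-test") for i in range(10_000))
print(counts)  # close to 5,000 each, but rarely exactly
```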

A solid A/B test is about running trials correctly, not just running them. Follow this approach, and you’ll test smarter rather than harder.

Target the Right Audience

Segmentation Strategies for Effective A/B Testing

You can’t set up an A/B test by throwing all your users into one big group. Segmenting your audience is key. Testing a homepage on people who aren’t interested in your website? A waste of time. You must target the right audience to make your A/B testing relevant.

Start with demographics. Sort users by job title, location, or age. For instance, test a product page on 25–35-year-olds and compare their response with that of older users; they’ll most likely behave differently. Behavioral segmentation is even better. Focus on customers who abandoned their carts last week or clicked an email but didn’t convert. These groups provide real insights.

Tools like Plerdy, HubSpot, or VWO help you slice and dice your audience. Split your users into smaller groups and run tailored A/B tests. That’s intelligent, data-driven testing, not guesswork.

Using Analytics to Identify Key Segments

Analytics tools are your friend in A/B testing. Platforms like Google Analytics track everything: who’s bouncing, who’s buying, and who’s just lurking. This information helps you decide which audience segments are worth testing.

Look at traffic sources. Visitors from paid clicks don’t behave like organic traffic. Device type matters too; desktop and mobile users often need somewhat different experiences. Once you’ve identified your audience, you’re ready to run an A/B test with real impact.

Common Segmentation Criteria

Segment your audience for A/B testing using:

- Demographics: age, location, or income level.

- Behavior: email opens, abandoned carts, or product page visits.

- Traffic source: organic search, paid ads, or social referrals.

- Device type: desktop vs. mobile.
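
Once each visitor is tagged with a segment, the analysis is a simple group-by. A minimal sketch in plain Python, assuming you can export one row per visitor with its segment, variant, and whether it converted; the function name and sample rows are invented for illustration.

```python
from collections import defaultdict


def conversion_by_segment(rows):
    """rows: iterable of (segment, variant, converted) tuples.

    Returns {(segment, variant): (visitors, conversion_rate)}.
    """
    visitors = defaultdict(int)
    conversions = defaultdict(int)
    for segment, variant, converted in rows:
        visitors[(segment, variant)] += 1
        conversions[(segment, variant)] += int(converted)
    return {key: (visitors[key], conversions[key] / visitors[key])
            for key in visitors}


rows = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", False), ("desktop", "A", True),
    ("desktop", "B", False), ("desktop", "B", False),
]
for (segment, variant), (n, rate) in sorted(conversion_by_segment(rows).items()):
    print(f"{segment:8} {variant}: {rate:.0%} of {n} visitors")
```

Each segment is effectively its own smaller test, so it needs an adequate sample of its own; with two visitors per cell, as here, the percentages mean nothing. Decide which segments you’ll compare before the test starts, since slicing results many ways afterward will eventually turn up a “winner” by chance.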

The right segmentation can make or break your A/B test. Test intelligently and you’ll uncover insights that change your approach.

Run Tests at the Right Time

Timing Your Tests for Optimal Results

Running an A/B test takes more than setup; timing counts. Big time. Imagine testing your holiday sales page in February: what good is that? Accurate results depend on avoiding seasonal bias and erratic user behavior.

Choose a stable period with consistent traffic. For instance, avoid running tests over Black Friday unless the test is specifically about holiday campaigns. Generally, a two-week window is the sweet spot for most A/B tests: it gathers enough data without dragging on endlessly.

Think about user behavior, too. Weekdays may bring in serious buyers, while weekends lean toward leisure shopping. Before you start, examine traffic patterns with tools like Plerdy or Google Analytics. The aim is to align your test window with your audience’s most active and regular period. A badly timed A/B test is wasted work.

When Not to Conduct A/B Tests

Sometimes testing is a bad idea. Low traffic? Skip it; your results won’t reach statistical significance. Launching a new product line? Wait until the initial buzz settles. Testing under unstable conditions, such as during site changes or technical problems, skews the data and makes your A/B results meaningless.

Don’t overlook seasonality either. Testing during holidays or off-peak periods can mislead you. Always look for periods when your audience behaves normally.

Best and Worst Times to Test

Best times:

- Launching mid-week, Tuesday through Thursday.

- Periods of steady site traffic.

- Non-holiday periods, unless you’re testing holiday campaigns.

Worst times:

- Black Friday or Cyber Monday, unless the test is seasonal.

- During significant site downtime or changes.

- When traffic falls below 500 daily visits.
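
These rules are easy to turn into a pre-launch check. A minimal sketch, assuming you already know your average daily visits and keep a list of blackout dates; the 500-visit threshold comes from the list above, while the function name and the Black Friday to Cyber Monday 2024 window are illustrative.

```python
from datetime import date
from typing import List, Tuple


def ready_to_launch(avg_daily_visits: float, start: date, end: date,
                    blackouts: List[Tuple[date, date]],
                    min_daily_visits: int = 500) -> Tuple[bool, List[str]]:
    """Return (ok, reasons) for a planned test window."""
    reasons = []
    if avg_daily_visits < min_daily_visits:
        reasons.append(f"only {avg_daily_visits:.0f} visits/day (< {min_daily_visits})")
    for b_start, b_end in blackouts:
        if start <= b_end and b_start <= end:  # the two date ranges overlap
            reasons.append(f"overlaps blackout {b_start} to {b_end}")
    return (not reasons, reasons)


# Black Friday through Cyber Monday, 2024.
blackouts = [(date(2024, 11, 29), date(2024, 12, 2))]
print(ready_to_launch(420, date(2024, 11, 18), date(2024, 12, 1), blackouts))
print(ready_to_launch(1200, date(2024, 9, 10), date(2024, 9, 23), blackouts))
```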

Timing can make or break your A/B test. Get it wrong and you’re chasing phantom results; get it right and you’re optimizing like a pro.

Analyze Results with Precision

Understanding Statistical Significance

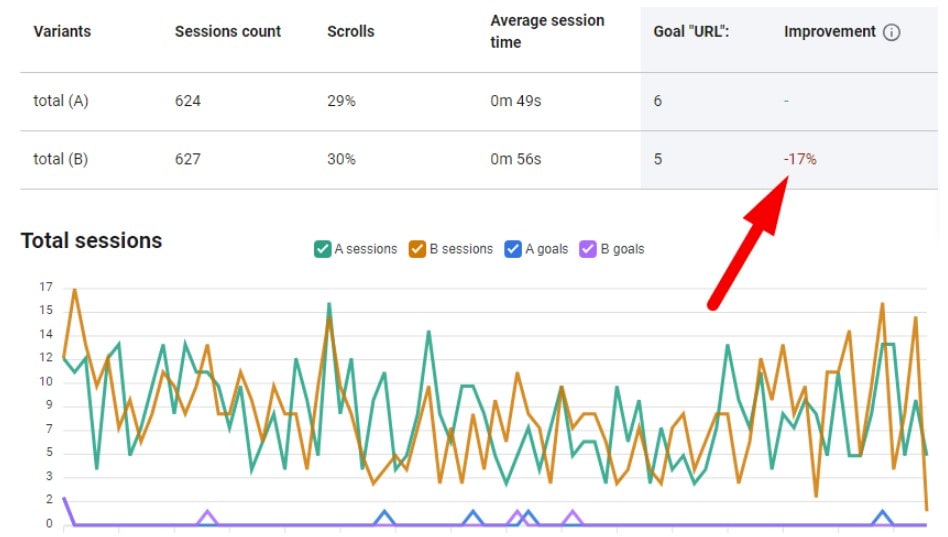

So you’ve run your A/B test. Now it’s time to examine the findings and make sense of all those figures. Don’t worry; it’s simpler than it seems.

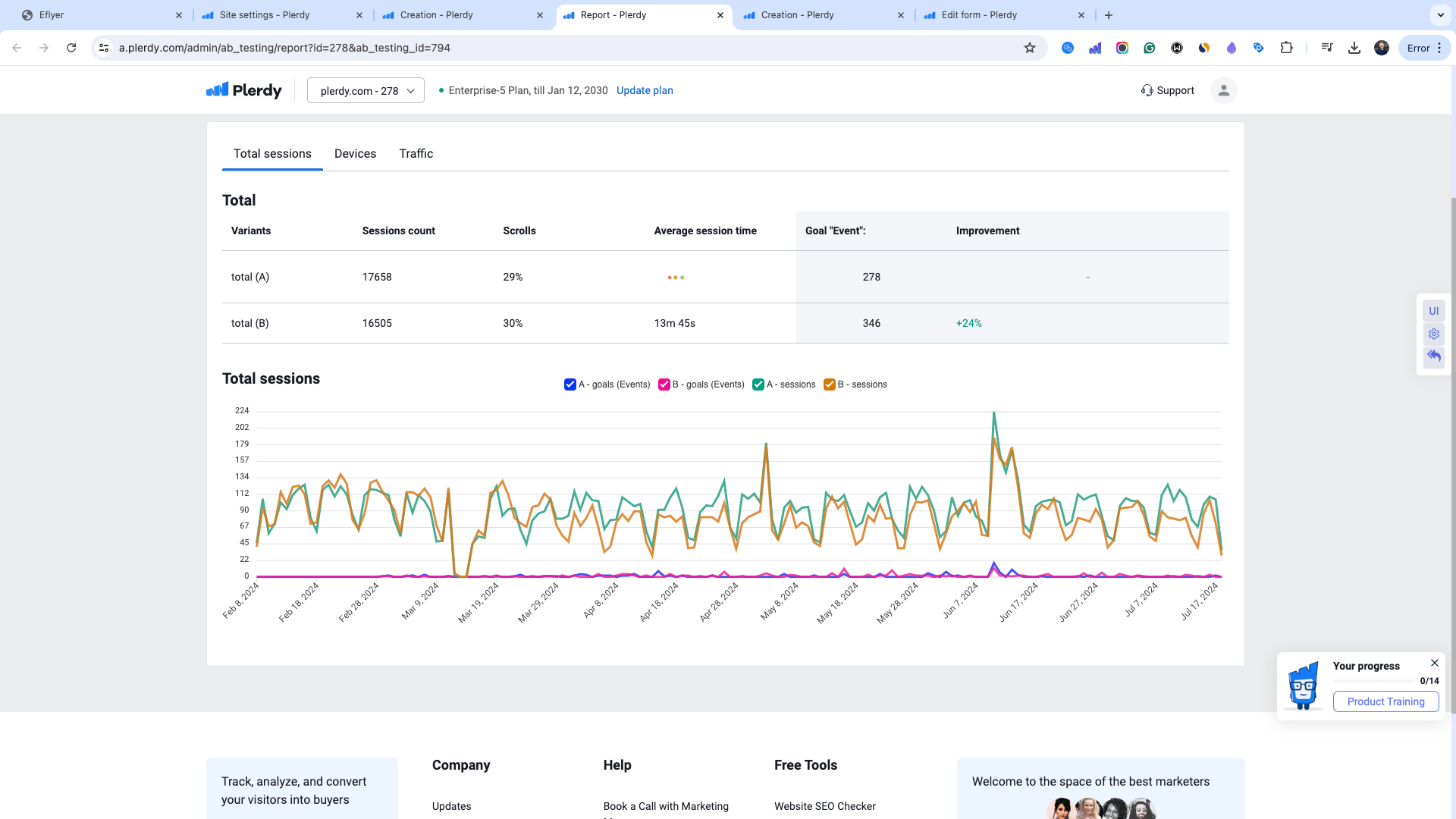

Statistical significance is what gives your A/B test credibility. A p-value below 0.05? That’s the magic number. It means that if there were really no difference between the versions, a gap at least this large would show up less than 5% of the time by chance alone. Anything higher? Your result might just be a fluke, so you’d need to keep testing. Confidence levels work the same way: 95% is the norm, but for minor adjustments 90% might be sufficient. Tools like Plerdy, VWO, or Optimizely can crunch these numbers for you and save you a headache. And remember: a test with just ten participants isn’t testing; it’s speculation.
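
For the curious, here is roughly what those tools compute for conversion rates: a two-proportion z-test. This Python sketch uses the normal approximation, which is reasonable when each version has plenty of visitors and conversions; the function name and the visitor and conversion counts are made up for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rates. Returns (z, p_value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # rate assuming no real difference
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: A converts 500 of 10,000 visitors, B converts 580 of 10,000.
z, p = two_proportion_z_test(500, 10_000, 580, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}")  # p is below 0.05

# A 20% vs 40% split with only 5 visitors per version proves nothing.
print(two_proportion_z_test(1, 5, 2, 5))
```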

Interpreting Test Data

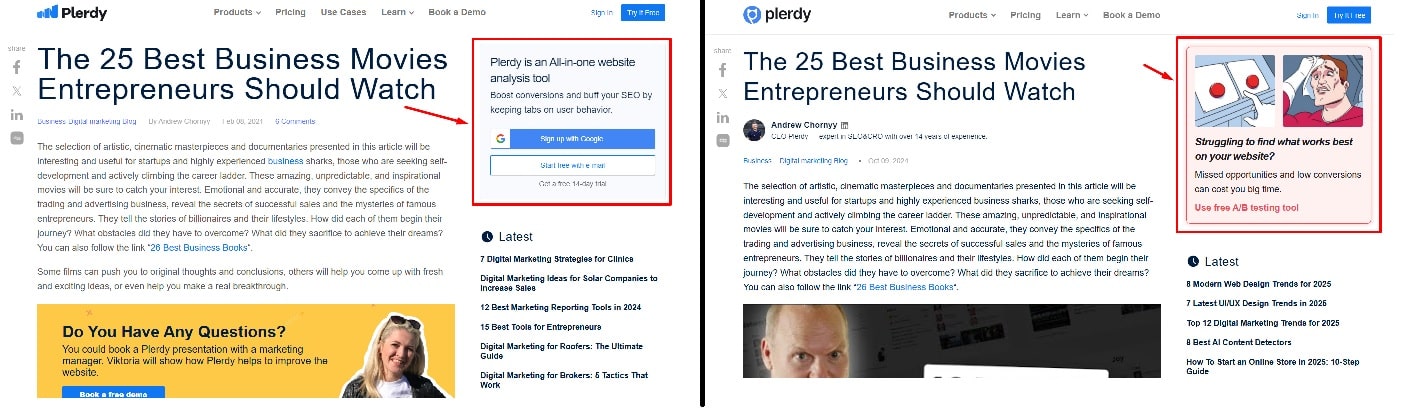

Here’s where your A/B test results come to light. What now? Start with your primary metric, probably revenue, conversion rate, or CTR. Did it improve? If so, you have a winner. If not, dig deeper.

Compare Version A and Version B: did one clearly outperform the other? Even a 2% increase on a high-traffic page can bring big gains. Stay objective. Version A isn’t the champion just because you liked it. The data speaks louder than personal opinions.
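
“Clearly outperform” is easier to judge with a range than with a single number. A minimal sketch, assuming the simple Wald interval for the difference between two conversion rates (other interval methods exist and behave better with small samples); the function name and counts are illustrative.

```python
import math
from statistics import NormalDist


def lift_with_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                       confidence: float = 0.95) -> dict:
    """Difference in conversion rate (B - A), its Wald confidence interval,
    and the relative lift of B over A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {
        "diff": diff,
        "low": diff - z * se,
        "high": diff + z * se,
        "relative_lift": diff / p_a,
    }


result = lift_with_interval(500, 10_000, 580, 10_000)
print({k: round(v, 4) for k, v in result.items()})
```

If the whole interval sits above zero, B most likely beats A; if it straddles zero, you can’t tell them apart yet. Here B’s 16% relative lift comes with a wide range, so the true gain could be much smaller.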

Methods for interpreting test results:

- Focus on the primary KPI.

- Double-check statistical significance (hello, p-values!).

- Compare segments to spot performance differences.

- Use Google Data Studio’s charts and insights to visualize the results.

Stick to the numbers, not your emotions.

Avoiding Common Analytical Mistakes

A/B testing is hard, and even the best marketers make mistakes. Avoid these pitfalls:

- Let the test run its course; don’t declare a winner too soon.

- Inconclusive data? Don’t force it. Rerun the A/B test.

- Ignore vanity metrics. Lots of clicks but no conversions? That’s not a win.
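
Why not call it early? Checking results every day and stopping at the first significant p-value inflates false positives. The Python simulation below runs A/A tests (both versions identical, so every “winner” is a false alarm) and compares checking once at the end with peeking daily; the traffic, conversion rate, run count, and function names are arbitrary assumptions for illustration.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, conv_b: int, n: int) -> float:
    """Two-sided two-proportion z-test with n visitors in each version."""
    p_pool = (conv_a + conv_b) / (2 * n)
    if p_pool in (0.0, 1.0):
        return 1.0
    se = math.sqrt(p_pool * (1 - p_pool) * (2 / n))
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(peek_daily: bool, runs: int = 500, days: int = 14,
                        daily_per_version: int = 200, rate: float = 0.05,
                        seed: int = 7) -> float:
    """Share of A/A tests that wrongly declare a winner at p < 0.05."""
    rng = random.Random(seed)
    false_alarms = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        declared = False
        for _ in range(days):
            for _ in range(daily_per_version):
                conv_a += rng.random() < rate  # both versions share the same true rate
                conv_b += rng.random() < rate
            n += daily_per_version
            if peek_daily and p_value(conv_a, conv_b, n) < 0.05:
                declared = True  # stop at the first "significant" look
                break
        if not peek_daily:
            declared = p_value(conv_a, conv_b, n) < 0.05
        false_alarms += declared
    return false_alarms / runs


fp_once = false_positive_rate(peek_daily=False)
fp_peek = false_positive_rate(peek_daily=True)
print(f"check once: {fp_once:.1%}, peek daily: {fp_peek:.1%}")
```

If you need to monitor a test while it runs, use a method designed for that, such as the sequential testing some tools offer; otherwise, fix the sample size in advance and read the result once.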

An A/B test is only successful when it produces genuine, measurable results.

Post-Test Data Analysis Checklist

- Verify that the sample size meets your pre-calculated target.

- Review your predefined KPIs and set aside irrelevant metrics.

- Look for audience anomalies or unusual traffic spikes.

- Record every outcome to inform your next A/B test.
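
The sample-size and traffic-anomaly checks can be partly automated. A minimal sketch: it compares each version’s visitor count with the sample size you planned, and runs a chi-square test for sample ratio mismatch, a common sign that assignment or tracking is broken when a planned 50/50 split comes back lopsided. The 0.001 threshold is a common but arbitrary convention, and the function names are hypothetical.

```python
import math


def srm_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for the observed A/B split."""
    total = n_a + n_b
    exp_a = total * expected_share_a
    exp_b = total - exp_a
    chi2 = (n_a - exp_a) ** 2 / exp_a + (n_b - exp_b) ** 2 / exp_b
    # Chi-square with 1 degree of freedom: P(X > chi2) = erfc(sqrt(chi2 / 2))
    return math.erfc(math.sqrt(chi2 / 2))


def post_test_checks(n_a: int, n_b: int, required_per_variant: int,
                     srm_threshold: float = 0.001) -> list:
    """Return a list of problems; an empty list means both checks passed."""
    issues = []
    if min(n_a, n_b) < required_per_variant:
        issues.append("sample size below the pre-calculated target")
    if srm_p_value(n_a, n_b) < srm_threshold:
        issues.append("sample ratio mismatch: check assignment and tracking")
    return issues


print(post_test_checks(10_050, 9_990, 8_000))   # healthy split
print(post_test_checks(10_000, 10_600, 8_000))  # suspiciously lopsided
```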

Every A/B test moves you toward better decisions. Stay curious, analyze precisely, and let the data guide your next move.

Share and Apply Results

Communicating Findings Effectively

Your A/B test came back with exciting new findings. Now spread the word; don’t keep them to yourself. Sharing results is the best way to make sure the test benefits the whole team.

Keep it simple. Use graphs or charts to show what worked and what didn’t. A PowerPoint deck with “before and after” screenshots? Always a hit. Highlight the key metric (conversion rate, CTR, or whatever you tested), because numbers speak louder than words. Make it relatable, too. Say, “We boosted click-throughs by 20%: that’s 200 extra clicks a day!” That gives the win real weight. Tools like Plerdy or Google Data Studio can help you create simple yet polished presentations.

Presenting means more than reporting results. Discuss what didn’t work, too. Maybe Version A flopped, but why? Lessons from failures are just as valuable as successes.

Scaling Successful Variations

Got a winning A/B test result? Time to scale up, but not too quickly. Roll out changes in phases to keep scaling smooth.

Start with the tested page or element, such as a CTA or headline. Once the change is live site-wide, monitor it closely. Did the 15% increase in conversions hold? Great! If not, dig deeper into the data.

Then apply the change to related pages. For instance, if a green button worked on a product page, try it on your blog or checkout flow. Run small follow-up tests in each new area; never assume one result applies everywhere.

Like cooking, scaling is about balance: taste as you go. VWO or Optimizely can help you manage the rollout; they reduce risk and keep things organized.

What to Include in Post-Test Reports

- A brief test summary: what, why, and how.

- Clear results presented in charts or tables.

- Insights: what worked and what didn’t.

- Action items: next tests and changes to scale.

- Recommendations for future A/B tests.

Sharing is caring; in A/B testing, it’s how you turn data into more wins and smarter decisions. Make those reports count!

Build a Culture of Experimentation

Encouraging a Data-Driven Mindset

Your team should love A/B tests as much as coffee breaks. If you want a data-driven culture, start with education. Show the team that testing is a winning strategy, not just another chore. Explain how small A/B changes, such as new button colors or headlines, can lead to significant gains like a 20% increase in conversion rates. When you bring numbers, people pay attention.

Involve everyone. Designers, marketers, and even customer service agents can suggest what to test. Simple tools like Plerdy or Google Optimize keep the process from overwhelming non-specialists. And don’t forget recognition. Celebrate every successful A/B test. That 5% increase in sign-ups? Pop the virtual champagne! Highlighting these outcomes builds trust in the process and excitement about it.

Finally, keep it transparent. Create a shared document or dashboard showing current tests along with their objectives and results. That makes A/B testing a team activity rather than just a marketing concern.

Iterative Testing for Long-Term Gains

One A/B test won’t change the world. A series of tests, though? That’s how you take control. Iterative testing means learning, adjusting, and improving step by step. For example, if your first test raises the click-through rate, the next one might optimize the checkout flow. Small improvements compound over time.

Treat A/B testing as a marathon, not a sprint. Even when things look “good enough,” keep testing; trends shift, user behavior changes, and yesterday’s wins may not hold tomorrow. Tools like Optimizely make it easier to manage multiple tests over time.

Best Practices for Integrating Testing

- Make testing part of your weekly routine.

- Prioritize the A/B tests with the highest potential impact.

- Measure performance with KPIs such as bounce rates or conversion rates.

- Use testing tools to automate repetitive tasks.

- Record each A/B test outcome for future reference.

Building a culture where testing is second nature helps your team stay ahead of the competition. And who doesn’t want to be known as the “data rockstar”?

Conclusion

A/B testing isn’t just a neat trick; it’s your secret weapon for growth. Following best practices helps you turn wild guesses into smart, fact-based decisions. Whether your audience prefers a minimalist design or a bold call to action, testing helps you find what they really want.

Running A/B tests properly does more than optimize individual campaigns; it builds a repeatable process for improvement. Tools like Plerdy or Optimizely simplify things, but mindset matters most. So embrace the data, trust the process, and watch your conversion rates climb. That’s how smart decisions, and success, are built.