Imagine tossing a coin to choose between vanilla and chocolate ice cream. A/B testing settles a similar choice, only far more rigorously and with far less left to chance! At its core, A/B testing is a methodical procedure marketers use to find out which version of a webpage, app, or ad produces better results. By comparing how versions A and B perform, companies can increase user engagement and conversion rates. At Plerdy, we use A/B testing to streamline marketing plans so that every improvement contributes to your success. Let’s explore why developing sound hypotheses is essential to getting the most out of your A/B tests.

Understanding A/B Testing

Ever wondered why one version of a website gets more clicks than another? That is where A/B testing comes in: a vital tool for businesses trying to maximize user engagement and conversion rates.

Definition and Basics

A/B testing, sometimes called split testing, compares two versions of a webpage, email, or other digital asset (variant A and variant B) to see which performs better on a metric such as click-through rate or purchase conversions. It lets you test a hypothesis about which version is more effective. By showing each version to a randomly assigned group of users, marketers collect performance data and can make informed decisions about which variant produces the higher conversion rate.
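The random split described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular testing tool works internally: it assumes each visitor has a stable user ID, and the function name `assign_variant` and the experiment name `homepage-cta` are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "homepage-cta") -> str:
    """Assign a user to variant "A" or "B" with a 50/50 split.

    Hashing the experiment name together with the user ID spreads users
    uniformly across 100 buckets, so assignment behaves like a random split
    while staying stable: the same user always lands in the same variant.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100  # 0..99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}")] += 1
    print(counts)  # each count should land near 5,000
```

Hash-based bucketing is one common design choice: it needs no stored assignment table, and changing the experiment name reshuffles users for the next test.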

Importance in Digital Marketing

In digital marketing, the ability to test and optimize is essential. A/B testing offers a low-risk way to build better marketing plans, and it hinges on understanding user preferences and behavior. Used well, it can substantially improve both business results and the user experience. Testing several call-to-action buttons, for example, lets marketers see which color, placement, or wording most increases user interaction and conversions.

A well-run A/B test can reveal small details that strongly influence user behavior. With this information, marketers can refine their content, layout, and user journeys to better match their target audience.

Good A/B testing aligns digital assets with both business objectives and user needs. It provides data-driven insights that help businesses make strategic decisions and improve overall performance. For further reading on A/B testing, consult reliable sources such as HubSpot’s marketing guides or Optimizely’s blog.

The Role of Hypotheses in A/B Testing

Imagine launching a digital campaign without knowing exactly what you want to change. It is like sailing without a compass! In A/B testing, the hypothesis serves as that compass, pointing every test toward clear, actionable insights.

Defining Hypothesis

An A/B testing hypothesis is a prediction of a test’s outcome. It rests on an assumption about how a specific change in a variant might raise conversion rates. Framing it as “If I change this element, then this effect will occur” gives your test clear direction. Because the hypothesis defines the framework of your A/B test and sets a focused goal for what you want to learn or validate, writing a good one is essential.

Application in A/B Testing

A hypothesis greatly simplifies your testing process. It determines which variant you test and which metrics you measure. For example, if your hypothesis holds that adding customer testimonials to a product page will raise conversions, your A/B test will specifically track conversion rates for versions of the page with and without testimonials. This focused approach saves time and money, and because your traffic goes toward one clear comparison, it makes statistically significant results easier to reach.

Developing a hypothesis requires analyzing existing data, understanding user behavior, and identifying opportunities for improvement. Once formed, the hypothesis guides how you build the test variant. It also structures your analysis, helping you determine whether the changes in your variant actually influence user behavior or whether any observed differences are due to chance.

Hypotheses are more than informed guesses; they are fundamental to the success of every A/B testing campaign. They provide the direction and focus needed to substantially raise conversion rates and overall user engagement. If you want to go further in developing strong hypotheses, resources such as VWO’s testing guide and Neil Patel’s blog posts on hypothesis generation are invaluable.

Testing and Refining Your Hypotheses

Ever wondered whether your A/B testing could accomplish more? Testing and refining hypotheses is not just one stage in the process; it is the engine of optimization.

Setting Up the Test

Setting up your A/B test means choosing the right tools and methods to test your ideas efficiently. This includes deciding which portion of your audience sees the variant and which sees the control. Make sure each group is large enough to produce statistically significant results. Implement and monitor each variation with a robust A/B testing tool such as Optimizely; Google Optimize filled this role for many teams until Google discontinued it in September 2023. These tools provide the infrastructure to run variants concurrently and collect real-time data, which is crucial for a reliable test.
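How big is “large enough”? One standard way to estimate it is the sample-size formula for comparing two proportions. The sketch below assumes a two-sided test at a 5% significance level with 80% power; the function name `sample_size_per_variant` and the 5% and 6% conversion rates are illustrative assumptions, not figures from this article.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH group for a two-sided
    two-proportion z-test to detect a change from p_control to p_variant."""
    if not (0 < p_control < 1 and 0 < p_variant < 1) or p_control == p_variant:
        raise ValueError("rates must lie in (0, 1) and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_control + p_variant) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(p_control * (1 - p_control)
                                   + p_variant * (1 - p_variant)))
    return math.ceil(spread ** 2 / (p_variant - p_control) ** 2)


if __name__ == "__main__":
    # Hypothetical baseline of 5% conversion; we hope the variant reaches 6%.
    print(sample_size_per_variant(0.05, 0.06))
```

With these inputs the estimate is a little over 8,000 visitors per variant. Because the required size grows with the inverse square of the difference you want to detect, halving that difference roughly quadruples the traffic you need.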

Analyzing Results

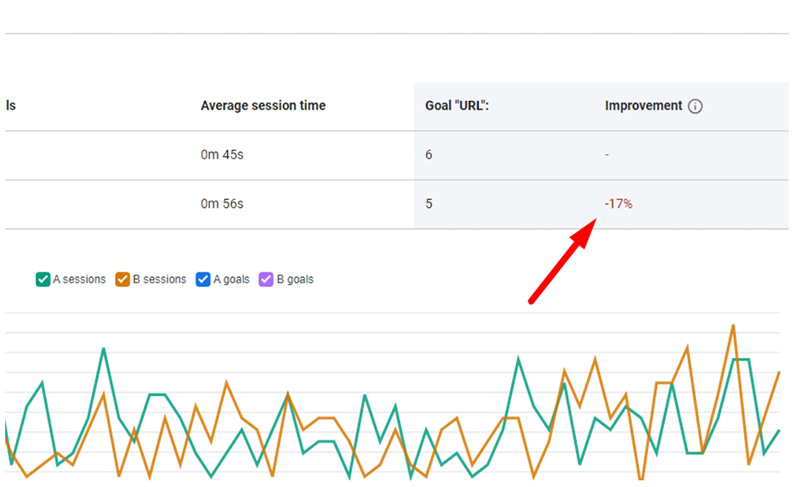

Review your test results to determine whether the changes in your variant raised conversion rates. Beyond headline metrics, the analysis should look for deeper insights such as engagement time and interaction quality. Use analytics tools to segment the data and look for patterns that support or contradict your hypothesis. If the original hypothesis is validated, consider rolling out the winning variation permanently. If the data are inconclusive or the hypothesis is disproved, refine your hypothesis based on what you learned and test again. This iterative process ensures that every test moves you closer to a better user experience and higher conversions.
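To check whether an observed difference is larger than chance alone would explain, a common choice is the two-proportion z-test. The sketch below uses only Python’s standard library; the function name and the conversion counts are made up for illustration, and real testing platforms may use other statistical methods (for example sequential or Bayesian approaches).

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between the
    conversion rates of variant A (control) and variant B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # shared rate if there is no real difference
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("need at least one conversion and one non-conversion")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 400 of 8,000 converted on A, 480 of 8,000 on B.
    z, p = two_proportion_z_test(400, 8_000, 480, 8_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

With these numbers z is roughly 2.8 and the p-value falls below 0.01, so the difference is unlikely to be chance alone at the usual 5% threshold. A p-value above the threshold corresponds to the inconclusive case described above: refine and retest rather than declaring a winner. The test is most trustworthy when the sample size is fixed in advance rather than stopping as soon as the result looks good.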

A/B testing is an iterative cycle of forming hypotheses, testing, analyzing, and improving. Each cycle sharpens your marketing plans and tailors your website or app to better meet user expectations, increasing conversions and keeping your digital assets performing at their best. For a closer look at A/B test analytics, credible sources such as Neil Patel’s tutorial on evaluating A/B test results can provide additional insights.

Real-World Examples of A/B Testing Hypotheses

What happens when theory meets practice in digital marketing? Let’s look at case studies of well-developed A/B testing hypotheses that were put into practice and produced useful findings and higher conversions.

E-commerce

A large online retailer wanted to raise its product page conversion rate. Its hypothesis was that adding customer reviews would build trust and encourage purchases. It created two variations: one without reviews and one that displayed reviews prominently. The test ran across several product categories to ensure a large, meaningful data set. After one month of testing, the variant with customer reviews had raised conversion rates by 18%, and the retailer went on to implement customer reviews site-wide. This case shows how A/B testing supports data-driven decisions that positively influence consumer confidence and sales.
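A reported lift such as the 18% above can mean two different things, and the case does not say which is meant. The sketch below computes both: the relative lift (percent change in the rate) and the absolute lift (difference in percentage points). The function name `lift` and the 4.0% and 4.72% rates are hypothetical, chosen only to illustrate the arithmetic.

```python
def lift(rate_control: float, rate_variant: float) -> dict:
    """Compare two conversion rates given as fractions (0.04 means 4%)."""
    if rate_control <= 0:
        raise ValueError("control rate must be positive")
    absolute = rate_variant - rate_control
    return {
        "absolute_pp": absolute * 100,                  # percentage points
        "relative_pct": absolute / rate_control * 100,  # percent change
    }


if __name__ == "__main__":
    # Hypothetical: control converts at 4.0%, variant at 4.72%.
    print(lift(0.040, 0.0472))
```

Here an 18% relative lift corresponds to a gain of only 0.72 percentage points, which is why it helps to report both figures and to confirm significance before rolling a change out.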

Email Marketing

A travel agency hypothesized that personalizing email subject lines would increase open rates. It developed two subject lines: one that included the recipient’s first name and one that did not. The test targeted thousands of subscribers and ran over a series of campaigns. The personalized emails had open rates 29% higher than the generic ones. The result confirmed the hypothesis and led the marketing team to build more personalization into its email strategy, increasing overall engagement and conversion rates.

These cases show how well-developed A/B testing hypotheses can produce better business results. By testing and refining hypotheses, companies gain insights that improve both performance and strategy. To learn more about how A/B testing can reshape business tactics, see Harvard Business Review’s coverage of the topic.

Advanced Techniques in Hypothesis Testing

As the digital world grows more complex, advanced hypothesis testing techniques help marketers and analysts improve their strategies and conversion rates.

Multivariate Testing

Multivariate testing extends the ideas of A/B testing by evaluating several variables at once. It lets you test combinations of changes across multiple parts of a webpage or campaign to find which combination works best. For instance, an e-commerce site might create multiple versions of its homepage, each with a different combination of images, headline copy, and button colors. By analyzing how these factors interact, marketers can identify which combination best increases engagement and conversion rates. The approach is powerful, but because traffic is divided among many combinations, it needs considerable traffic to reach statistical significance, which makes it best suited to larger sites or campaigns.
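The traffic requirement follows from how multivariate tests are built. In a full-factorial design every combination of element versions becomes its own variant, so the number of variants is the product of the number of options for each element. The sketch below assumes three hypothetical homepage elements and a hypothetical 50,000 weekly visitors; the names `FACTORS` and `full_factorial` are illustrative.

```python
from itertools import product

# Hypothetical homepage elements and the versions being tested for each.
FACTORS = {
    "hero_image": ["lifestyle", "product", "illustration"],
    "headline": ["benefit", "urgency"],
    "button_color": ["green", "orange", "blue"],
}


def full_factorial(factors: dict) -> list:
    """Return every combination of element versions as a dict."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


if __name__ == "__main__":
    combos = full_factorial(FACTORS)
    weekly_visitors = 50_000
    print(len(combos), "combinations")
    print(weekly_visitors // len(combos), "visitors per combination per week")
```

Three images, two headlines, and three button colors already yield 18 combinations, so each receives fewer than 3,000 visitors a week, compared with 25,000 per variant if the same traffic were split between just two versions in an A/B test.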

Segmentation in A/B Testing

Segmentation tailors hypothesis testing to user groups defined by geography, behavior, or age. It helps companies create more focused, effective variations for each audience and improves the accuracy of test results. A streaming service might, for example, test two separate promotional offers: one for new members and another for lapsed subscribers. By segmenting its audience and tailoring the offers, the service can better identify which incentives drive conversions in each group, improving both how it spends its marketing budget and its overall approach.
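Analyzing a segmented test comes down to computing the conversion rate separately for each combination of segment and variant. The sketch below assumes raw events are available as `(segment, variant, converted)` tuples; that format, the function name `conversion_by_segment`, and the sample events are hypothetical.

```python
from collections import defaultdict


def conversion_by_segment(events):
    """Return {(segment, variant): conversion_rate} from an iterable of
    (segment, variant, converted) tuples, where converted is a bool."""
    tallies = defaultdict(lambda: [0, 0])  # key -> [conversions, visitors]
    for segment, variant, converted in events:
        tally = tallies[(segment, variant)]
        tally[0] += int(converted)
        tally[1] += 1
    return {key: conv / visitors for key, (conv, visitors) in tallies.items()}


if __name__ == "__main__":
    events = [
        ("new", "offer_A", True), ("new", "offer_A", False),
        ("new", "offer_B", False), ("new", "offer_B", False),
        ("lapsed", "offer_A", False), ("lapsed", "offer_A", False),
        ("lapsed", "offer_B", True), ("lapsed", "offer_B", True),
    ]
    for key, rate in sorted(conversion_by_segment(events).items()):
        print(key, f"{rate:.0%}")
```

The tiny event list only shows the mechanics. In a real test, splitting traffic by segment shrinks every cell, so each segment needs its own adequate sample size before its result can be trusted.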

Advanced techniques such as multivariate testing and segmentation help companies improve their digital marketing and understand their audience more deeply. They make it possible to identify which audience segments convert, raising ROI and conversion rates. For a deeper exploration of these techniques, resources such as Optimizely’s guide to multivariate testing offer thorough coverage.

A/B Testing Tools and Resources

The right tools can make or break your A/B testing approach. With many platforms available for designing, running, and analyzing tests, the best choice depends on what fits your requirements.

Top A/B Testing Platforms

Optimizely, a leading A/B testing tool, offers strong capabilities for companies that want to test and optimize user experiences across websites and applications. Its straightforward interface lets marketers set up and run tests efficiently, measuring variation performance and conversion data to produce actionable insights. Google Optimize, which integrated natively with Google Analytics, long made efficient testing simpler for smaller teams and those with limited budgets, though Google discontinued it in September 2023. Both platforms supported multivariate testing and offered detailed reporting to help refine marketing plans and raise conversion rates.

Additional Resources

For readers who want to learn more, resources like Ron Kohavi et al.’s “Trustworthy Online Controlled Experiments” provide a thorough grounding in the science of A/B testing. Websites such as ABTestGuide.com offer interactive calculators and visual aids that help you develop hypotheses and interpret test results more intuitively. The CXL Institute offers A/B testing courses along with case studies ranging from basic to advanced approaches.

Any effective A/B testing plan depends on equipping yourself with the right tools and resources. Whatever your level of marketing experience, powerful tools and good training resources will help you test, grow, and optimize for conversions. For further research, consider reputable sources such as CXL’s A/B testing blog or Optimizely’s resource center.

Utilizing Plerdy in A/B Testing Hypotheses

Adding Plerdy to your A/B testing plan can change the way you create hypotheses and track their performance. Here’s how you can use Plerdy to improve conversion rates and sharpen your testing strategy.

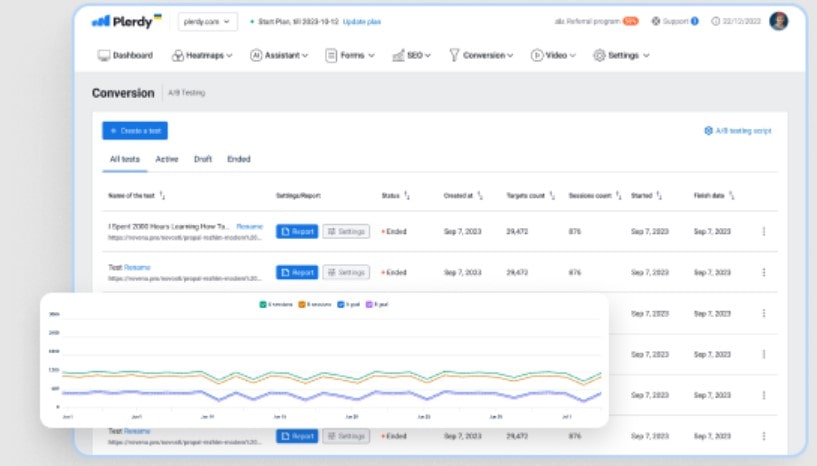

Plerdy’s Features for A/B Testing

Plerdy is a flexible tool designed to maximize the success of A/B tests through conversion funnel analysis, detailed heatmaps, and session replay. These features let companies see how users engage with different versions of a web page. Heatmaps show where people click most often, helping identify the most engaging elements of each version. Session replay provides a video-like playback of how users navigate each version, offering insights beyond standard analytics. This behavioral data is key to validating assumptions about user behavior and preferences.

Plerdy’s conversion funnel analysis shows how the changes in each variant affect user movement through the key stages of the marketing funnel. This information is essential for understanding which variant increases conversion rates and why, and it helps marketers decide which elements to adjust or replace entirely.
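Whatever tool collects the data, the underlying funnel calculation is simple: divide the number of users who reach each stage by the number who reached the previous one. The sketch below is a generic illustration, not Plerdy’s API or export format; the function name `funnel_report`, the stage names, and the counts for the two variants are hypothetical.

```python
def funnel_report(stages):
    """stages: list of (stage_name, users_reaching_stage) in funnel order.
    Returns (step_rates, overall_rate)."""
    step_rates = []
    for (prev_name, prev_users), (name, users) in zip(stages, stages[1:]):
        rate = users / prev_users if prev_users else 0.0
        step_rates.append((f"{prev_name} -> {name}", rate))
    overall = stages[-1][1] / stages[0][1] if stages[0][1] else 0.0
    return step_rates, overall


if __name__ == "__main__":
    variants = {
        "A": [("product", 1000), ("cart", 300), ("checkout", 150), ("purchase", 90)],
        "B": [("product", 1000), ("cart", 360), ("checkout", 160), ("purchase", 96)],
    }
    for name, stages in variants.items():
        steps, overall = funnel_report(stages)
        print(name, [(step, f"{rate:.0%}") for step, rate in steps], f"overall {overall:.1%}")
```

Comparing step rates, not just the overall rate, shows where a variant helps or hurts: in these made-up numbers, B gets more users into the cart but loses a larger share of them before checkout.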

Case Studies Using Plerdy

Many businesses have used Plerdy’s features to improve their A/B testing results. For example, an e-commerce website compared two product page layouts and, with the help of Plerdy’s heatmaps, found that a simpler design produced 20% more conversions. In another case, a SaaS company used session replay to investigate why one sign-up form variant outperformed another, which helped it increase user registrations by 15%.

Plerdy supports both the creation and validation of hypotheses and provides detailed analysis that guides strategic improvements. For marketers who want to improve their A/B testing performance, adding Plerdy to the process is a smart move, because it enables data-driven decisions that increase user engagement and conversions. Visit Plerdy’s official website to see how it could transform your A/B testing approach.

In conclusion

As this article has shown, higher conversion rates and deeper user engagement depend on mastering A/B testing and hypothesis development. Every method and tool covered, from writing solid hypotheses to using platforms like Plerdy, helps marketers make informed, evidence-based decisions. The journey doesn’t end here: explore more tactics and ideas in depth on our blog to support your digital marketing efforts.

Ready to improve your A/B testing? Explore Plerdy’s extensive tool set to expand your testing capabilities and boost your website’s performance. Visit the Plerdy blog for more insightful articles and expert guidance, and start your optimization journey today.