A/B testing is a bit like cracking a riddle: you are trying to find out which of your two versions is the hero. The hitch is that, without tracking the right metrics, you are hunting for clues blindfolded. Metrics are not just numbers; they are the pulse of your experiments, revealing what clicks (literally) and what flops.

Whether you run ads for a behemoth like Amazon or hustle at a startup, digital marketers know one thing: every click counts. With tools such as Google Optimize or Plerdy, you can turn those “what-ifs” into statistically supported gains. And the best part? It is not rocket science.

Ready to dig in and master A/B testing? Let’s roll!

What Are A/B Testing Metrics?

Definition and Role of A/B Testing Metrics

A/B testing metrics are your secret weapon for finding out what performs best in your tests. Say you are testing two website headlines: the metrics reveal which one keeps readers interested or gets them clicking “Buy Now.” They are the road map for your experiment, pointing to the factors behind success. Testing without metrics is guessing in the dark. With them, your A/B test turns from a haphazard gamble into a clear strategy.

Primary vs. Secondary Metrics: Key Differences

Not all A/B test metrics play the same role. Primary metrics, such as conversion rate, measure the main outcome you are after: if the goal of your test is more sales, sales should be your main focus. Secondary metrics, such as scroll depth, add context but do not directly measure success. You need both kinds to understand what is happening in your tests and to improve future ones.

Examples of Commonly Used Metrics

- Conversion Rate: Shows what share of visitors complete a task, such as registering.

- Click-Through Rate (CTR): Tracks how often users click a link or button during A/B tests.

- Scroll Depth: Reveals how far users scroll down your page in each test variation.

- Bounce Rate: Shows whether visitors leave without interacting.

Track these metrics with Plerdy or Hotjar to give every A/B test its best shot at winning!

How to Choose the Right A/B Testing Metrics

Aligning Metrics with Business Goals

Choosing A/B test metrics without a specific objective? Big mistake. First ask yourself: “What do I need to learn from this test?” Are you after a lower bounce rate, more clicks, or a better conversion rate? Whatever the goal, choose metrics that fit it. For instance, track conversion rate if your A/B test is about improving the checkout flow, and pay close attention to scroll depth if you are testing content placement. The best metrics tie your test results directly to the growth of your business.

Frameworks for Metric Selection

Rather than guessing, use a proven framework to choose metrics for your A/B tests and stay on target:

- HEART Framework: Tracks happiness, engagement, adoption, retention, and task success. Well suited to optimizing user experience.

- Pirate Metrics (AARRR): Focuses on acquisition, activation, retention, referral, and revenue. Ideal for covering the whole user journey.

- North Star Metric: Pinpoints one key metric, such as revenue per user, that guides all A/B testing efforts.

Frameworks like these help ensure you measure what truly counts and keep your tests organized.

Common Pitfalls in Metric Selection

Here are the mistakes that can derail your A/B test:

- Selecting too many metrics. Focus on one or two primary ones.

- Ignoring outside factors, such as holiday traffic spikes.

- Relying on “vanity metrics,” such as raw page views, that fail to reflect actual success.

Good A/B testing metrics produce insights, not just data. And since tools like Plerdy simplify tracking the right metrics, every test brings you one step closer to success!

Top 10 A/B Testing Metrics Explained

Conversion Rate

Conversion rate is the rock star of A/B testing metrics. It shows how well your test variants turn visitors into leads, subscribers, or customers. For instance, if 1,000 people land on your A/B test page and 100 of them complete the action (buy, register, and so on), your conversion rate is 10%. The arithmetic is basic, but the metric is powerful.
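To make the arithmetic concrete, here is a minimal Python sketch of the calculation. The function name `conversion_rate` is just an illustrative choice, and the numbers are the ones from the example above.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Return the conversion rate as a percentage of visitors."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return 100.0 * conversions / visitors


# 100 completed actions out of 1,000 visitors, as in the example above.
rate = conversion_rate(conversions=100, visitors=1000)
print(f"Conversion rate: {rate:.1f}%")  # Conversion rate: 10.0%
```

Run the same function once per variation and you have the primary metric for most A/B tests.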

How can you raise the conversion rate in an A/B test?

- Simplify forms. Eliminate pointless fields; no one needs your pet’s name.

- Put trust badges or testimonials in your variation to inspire confidence.

- Test several CTA buttons. “Buy Now” could beat “Start Today.”

Click-Through Rate (CTR)

Click-through rate tells you whether your A/B test variant is attracting attention. It is the number of clicks on a link, button, or ad relative to the number of users who saw it. Tracking CTR in A/B tests helps you pinpoint what drives users to interact.
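As a rough sketch, CTR can be computed per variation and compared side by side. The click and impression counts below are made up for illustration, and `click_through_rate` is a hypothetical helper name.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a percentage: clicks divided by impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return 100.0 * clicks / impressions


# Hypothetical counts per variation: (clicks, impressions).
variants = {"A": (120, 4000), "B": (150, 4000)}

ctr_by_variant = {
    name: click_through_rate(clicks, impressions)
    for name, (clicks, impressions) in variants.items()
}
leader = max(ctr_by_variant, key=ctr_by_variant.get)

for name, ctr in ctr_by_variant.items():
    print(f"Variation {name}: CTR {ctr:.2f}%")
print(f"Higher CTR so far: Variation {leader}")
```

A higher CTR on its own does not prove a winner; the gap still has to hold up once enough data is in.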

How can A/B tests help raise CTR?

- Try strong CTAs: “Grab the Deal” instead of “Learn More.”

- Test button position or color. Maybe yours is red, but a green button might win.

- Create interesting, succinct headlines that pique users’ curiosity.

Bounce Rate

Bounce rate is the villain among your A/B test metrics. It is the share of visitors who land on your page and leave right away without engaging. If one test variation shows a higher bounce rate, dig deeper.

How can A/B testing help lower bounce rate?

- Speed up your page load time. According to widely cited Google research, the probability of a bounce rises by 32% as load time goes from one second to three.

- Test headlines that state a clear value. Confusing headlines kill engagement.

- Guide users across your A/B test variant with clear navigation.

Scroll Depth

Scroll depth measures how far down the page visitors travel. It is an ideal A/B testing metric for blogs, product pages, and long-form content in particular. If users stop scrolling halfway, your design or content may need some changes.

How can you improve scroll depth in A/B tests?

- Add engaging visuals such as animations, videos, or charts.

- Test shorter versus longer content to see which holds attention better.

- Break up content with headers and bullet points for easier scanning.

Retention Rate

Retention rate is critical for A/B tests aimed at loyalty. It shows what share of visitors come back after their first visit. A high retention rate usually indicates that your A/B test variation delivers real value or a good user experience.
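One simple way to compute this, sketched below, is to compare the set of users who visited for the first time with the set of users seen again later. The user IDs and the helper name `retention_rate` are invented for illustration; real analytics tools may define the return window differently.

```python
def retention_rate(first_visitors: set, later_visitors: set) -> float:
    """Percentage of first-time visitors who came back at least once."""
    if not first_visitors:
        raise ValueError("first_visitors must not be empty")
    returned = first_visitors & later_visitors  # users present in both sets
    return 100.0 * len(returned) / len(first_visitors)


# Hypothetical user IDs for one test variation.
first_visit = {"u1", "u2", "u3", "u4"}
seen_again = {"u2", "u4", "u9"}  # "u9" is ignored: not part of the first cohort

retention = retention_rate(first_visit, seen_again)
print(f"Retention rate: {retention:.0f}%")  # Retention rate: 50%
```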

How can you raise the retention rate in A/B testing?

- Test personalized experiences, such as recommendations or dynamic content.

- Offer special deals for return visits.

- Bring users back to your site with follow-up emails or notifications.

Session Duration (Average Time on Page)

Session duration shows how long users stay on your A/B test page. Longer sessions suggest your content is engaging; shorter sessions suggest something is not clicking (pun intended).

How can you extend session duration in an A/B test?

- Add interactive components such as embedded videos, polls, or quizzes.

- Test narrative structures; users linger for a great story.

- Keep users browsing your page variants by including relevant content links.

Cart Abandonment Rate

Cart abandonment rate measures how often users add items to their cart but do not complete the purchase. For e-commerce, it is a fundamental A/B testing metric.
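A minimal sketch of the calculation, assuming you can count carts created and purchases completed for each variation. The counts and the function name `cart_abandonment_rate` are hypothetical.

```python
def cart_abandonment_rate(carts_created: int, purchases_completed: int) -> float:
    """Percentage of created carts that did not end in a purchase."""
    if carts_created <= 0:
        raise ValueError("carts_created must be positive")
    if not 0 <= purchases_completed <= carts_created:
        raise ValueError("purchases_completed must be between 0 and carts_created")
    abandoned = carts_created - purchases_completed
    return 100.0 * abandoned / carts_created


# Hypothetical checkout test: Variation B uses fewer checkout steps.
abandonment_a = cart_abandonment_rate(carts_created=400, purchases_completed=100)
abandonment_b = cart_abandonment_rate(carts_created=400, purchases_completed=140)

print(f"Variation A: {abandonment_a:.1f}% abandoned")  # Variation A: 75.0% abandoned
print(f"Variation B: {abandonment_b:.1f}% abandoned")  # Variation B: 65.0% abandoned
```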

How can A/B tests help lower cart abandonment?

- Test checkout flows; fewer steps usually mean higher completion rates.

- Show shipping charges up front to prevent surprises.

- Test exit-intent pop-ups that offer a discount to users about to abandon their cart.

Customer Satisfaction Score (CSAT)

CSAT measures how satisfied users are with your product or service after interacting with your A/B test variant. Surveys can reveal which version leaves people happiest.

How can you raise CSAT in A/B testing?

- Keep feedback forms short; nobody wants a 20-question grilling.

- Test different support channels (live chat versus an FAQ page).

- Follow up with customers who gave low scores to address their pain points.

Revenue

In A/B testing, revenue is the ultimate bottom-line metric. It measures the money your test variant earns, and it matters most when you test pricing models or upsell techniques.

How can A/B tests help boost revenue?

- Test price points to find the one that drives the most sales.

- Bundle products to encourage more spending.

- Use urgency; “Limited Offer” messaging can increase purchases.

Average Order Value (AOV)

AOV is the average amount spent per transaction. Raising AOV through cross-selling and similar techniques can significantly boost profitability.
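In code, AOV is simply total revenue divided by the number of orders. The sketch below uses invented order totals for two variations; `average_order_value` is an illustrative name, not a function from any particular tool.

```python
def average_order_value(order_totals: list) -> float:
    """Return total revenue divided by the number of orders."""
    if not order_totals:
        raise ValueError("order_totals must not be empty")
    return sum(order_totals) / len(order_totals)


# Hypothetical order totals; Variation B shows a free-shipping threshold.
orders_a = [40.0, 60.0, 80.0]
orders_b = [50.0, 70.0, 90.0, 110.0]

aov_a = average_order_value(orders_a)
aov_b = average_order_value(orders_b)

print(f"Variation A: AOV {aov_a:.2f}")  # Variation A: AOV 60.00
print(f"Variation B: AOV {aov_b:.2f}")  # Variation B: AOV 80.00
```

Because a few very large orders can pull the average up, it is worth glancing at the individual order totals as well.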

How can you raise AOV in A/B testing?

- Offer free shipping on orders above a certain value.

- Try upselling premium product versions.

- Recommend related products right at checkout.

Each of these metrics adds its own value to your A/B testing approach. Use tools such as Plerdy, Google Optimize, or Hotjar to gather insights and improve your test results. Combining several metrics gives you a clear view of what is and isn’t working. A/B testing is mostly about learning, so keep testing and improving!

Best Practices for A/B Testing Metrics Analysis

Setting Clear Hypotheses Before Testing

Before starting an A/B test, ask yourself: what is the objective? You need a hypothesis that ties your test to the metrics you are tracking. For instance: “Adding a colorful CTA button will raise click-through rate by 20%.” Keep it simple but specific. A vague goal like “make the site better” won’t help. Start with a well-defined plan, since nobody enjoys running a test only to scratch their head over the results later.
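One way to keep a hypothesis honest is to write it down as data before the test starts and check the observed lift against it afterward. The sketch below assumes the 20% figure is a relative lift over the control; the `Hypothesis` class, the metric name, and the click counts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    metric: str
    expected_relative_lift: float  # 0.20 means "+20% relative to control"


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant over the control, e.g. 0.30 for +30%."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


hypothesis = Hypothesis(metric="click_through_rate", expected_relative_lift=0.20)

# Hypothetical results: clicks divided by impressions for each version.
control_ctr = 50 / 1000
variant_ctr = 65 / 1000

lift = relative_lift(control_ctr, variant_ctr)
hypothesis_met = lift >= hypothesis.expected_relative_lift
print(f"Observed lift on {hypothesis.metric}: {lift:.0%}, target met: {hypothesis_met}")
```

Hitting the target lift says nothing yet about whether the difference is statistically significant; that needs a separate check on sample size.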

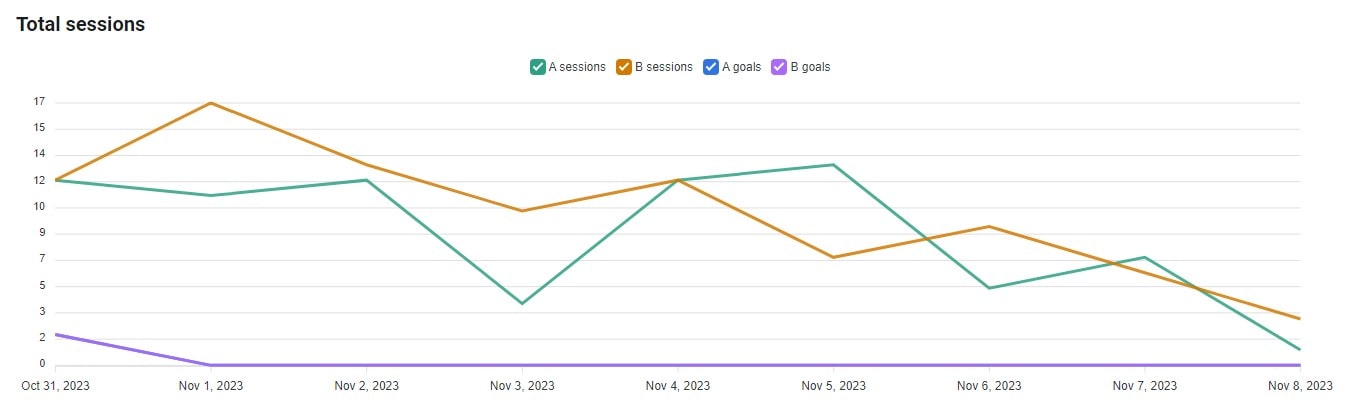

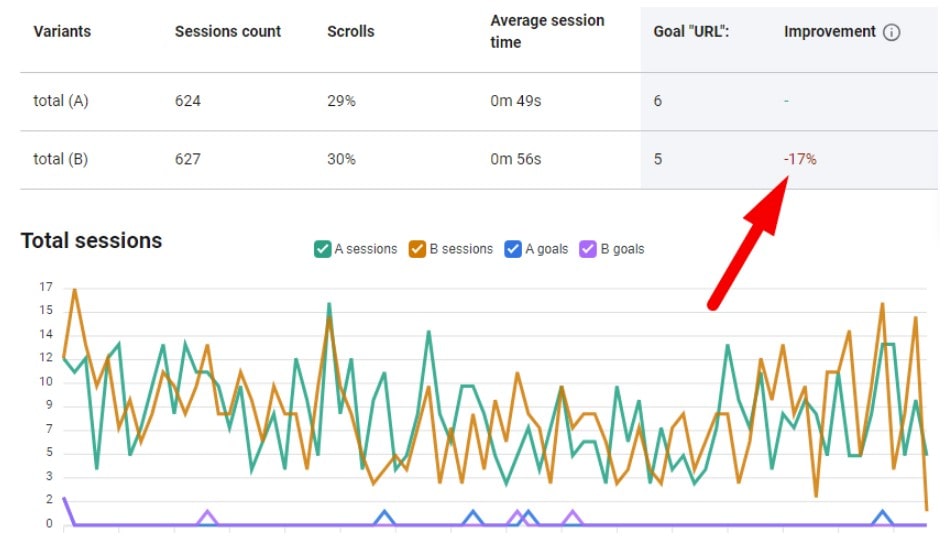

Achieving Statistical Significance

Statistical significance is the secret sauce of A/B testing. Without it, your results are merely lucky guesses. Wait until your test has gathered enough data (clicks, views, or conversions) to state with confidence that one variation beats the other. For instance, never call a winner after ten visits. Tools like Google Optimize or Plerdy can compute confidence levels for you. Trust the math, not your instincts.
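For a feel of what such a calculation can look like, here is a sketch of a two-sided, two-proportion z-test using only Python's standard library. This is one common textbook approach, not necessarily the method Google Optimize or Plerdy use internally, and the visitor and conversion counts are invented.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, p_value) for a two-sided test that both rates are equal."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate if A and B were identical
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when pooled rate is 0 or 1")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Ten visits per variation: far too little data to call a winner.
_, p_small = two_proportion_z_test(conv_a=1, n_a=10, conv_b=2, n_b=10)

# A thousand visits per variation with a similar gap in rates.
_, p_large = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)

ALPHA = 0.05  # conventional threshold, i.e. a 95% confidence level
print(f"10 visits each:    p = {p_small:.3f}, significant: {p_small < ALPHA}")
print(f"1,000 visits each: p = {p_large:.4f}, significant: {p_large < ALPHA}")
```

The normal approximation behind this test is itself shaky at very small sample sizes, which is one more reason not to decide after ten visits.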

Analyzing Results Effectively

Your A/B test is complete; now what? Dig into the metrics. Has your primary metric, such as conversion rate, improved? To see the whole picture, also review secondary metrics such as bounce rate. Where possible, combine these figures with qualitative feedback. Remember, the point is to find out why one version worked better, not just to declare a winner.

Avoiding Common Mistakes

Here are the mistakes to steer clear of in your A/B testing:

- Skipping the hypothesis: Without one, you are just testing at random.

- Stopping the test too soon: Statistical significance takes patience.

- Not considering outside variables: Seasonal trends or holiday traffic can skew results.

- Focusing on vanity metrics: Page views by themselves don’t define success.

- Testing too many changes at once: Keep it simple, one variable at a time.

These ideas can help your A/B tests feel less random and more planned!

Practical Tips to Improve A/B Testing Metrics

Leveraging Tools for Accurate Tracking

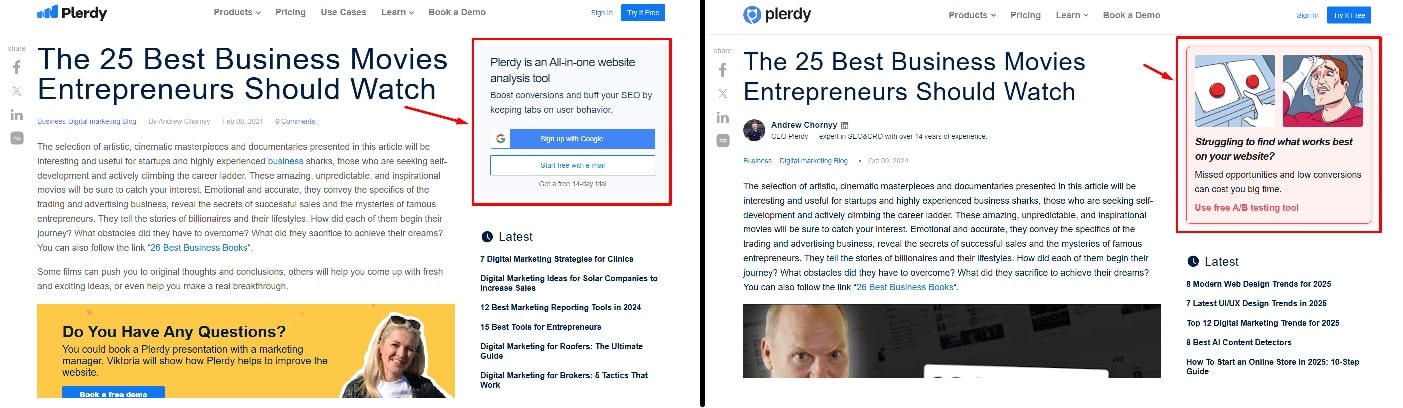

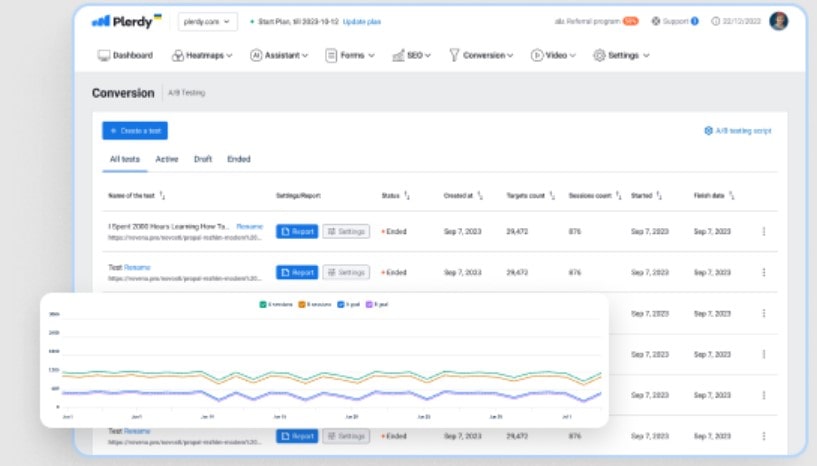

Running A/B tests without the right tools? That is a recipe for chaos. Tracking A/B data calls for accuracy, and the right tools will save your test (and your day!). Start with heatmaps from Plerdy, which show where people click, scroll, or completely ignore your A/B test variations. Session replays from tools like Hotjar or Microsoft Clarity let you watch how users actually behave.

Want deeper insight? Combine an A/B testing platform such as Google Optimize with Google Analytics to track conversion rate, session duration, and bounce rate for every variation. Want something fancier? Use tools that integrate directly with your CRM or email campaigns to measure how A/B tests affect lifetime value or user retention.

Pro tip: For deeper understanding, pair your metrics with visual data. A heatmap showing that clicks land on Variation A rather than B may be your “aha” moment.

Optimizing User Journeys Based on Insights

A/B testing is about creating better experiences, not just producing numbers. Look at your metrics and ask, “Why?” Are users engaging more with Variation A because of a strong CTA, or leaving Variation B because of a confusing design? Metrics like click-through rate and scroll depth help guide your decisions.

Simple adjustments can have a big impact:

- Test fresh CTAs: “Try for Free” might beat “Learn More.”

- Shorten forms: Does anyone really need to enter their shoe size?

- Improve speed: By some estimates, a one-second delay can cost up to ten percent of conversions.

A/B testing is your secret weapon for fine-tuning user journeys. Use the insights wisely, and remember: even a failed test teaches you something. Just keep experimenting and optimizing!

In essence

A/B testing metrics are your secret weapon in digital marketing. They are not just figures; they are your road map to smarter decisions. Tracking key metrics such as conversion rate, scroll depth, and bounce rate shows you which strategies work and which do not. It is like peeling an onion: layer by layer, you get to the core of user behavior.

Ready to energize your strategy? Tools like Plerdy, Google Optimize, and Hotjar simplify setting up, tracking, and learning from your experiments. Don’t let failure scare you; every test, including the difficult ones, teaches something worthwhile.

Now it is your turn. Start your next A/B test, dig into the data, and watch your conversions grow!