In the digital marketing maze, A/A tests are the unsung heroes, crucial yet often overlooked. These tests, a simpler counterpart to A/B testing, compare identical page versions to establish a reliable performance baseline. Key for marketers, especially in eCommerce, healthcare, and education, A/A tests validate analytics tools and confirm data integrity, setting the stage for accurate A/B testing and better user experience strategies.

Here’s why they matter:

- Baseline Measurement: For accurate performance benchmarks.

- Tool Validation: Checks analytics tools’ accuracy.

- Data Reliability: Ensures trustworthy data.

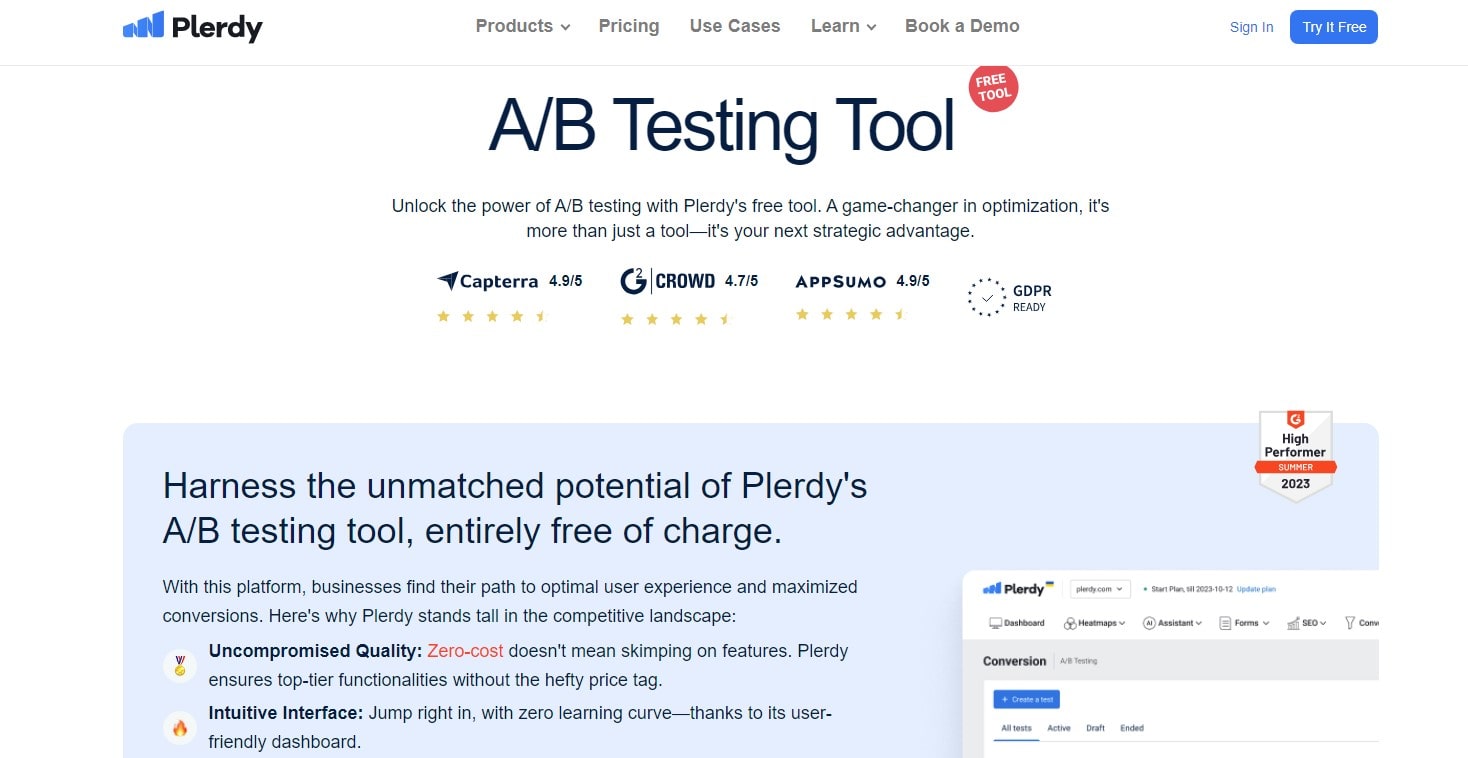

With tools like Plerdy, which analyzes user behavior and enhances CRO & UX, integrating A/A tests can significantly uplift digital strategies.

Explanation of the Concept of A/A testing

In the tapestry of modern data analysis, the A/A test emerges as a subtle yet pivotal stitch. Unlike its more celebrated counterpart, the A/B test, an A/A test operates under a unique premise: it deliberately compares two identical scenarios. Picture this: you’re examining two groups that see no variation at all, yet tracking their performance as if they were different. This approach isn’t about seeking stark contrasts; instead, it’s a meticulous check of the testing environment itself.

The essence of an A/A test lies in its ability to serve as a calibration tool. By running two identical versions, a kind of mirror image in the testing landscape, analysts can confirm the reliability and accuracy of their test mechanisms. If the A/A test reveals significant discrepancies between the two groups, and they persist when the test is repeated, it signals an underlying flaw in the test setup or data collection process.

Here’s what sets an A/A test apart:

- Consistency Check: It ensures that the test environment is stable and consistent.

- Error Identification: Highlights anomalies in data collection or analysis methods.

- Baseline Establishment: Provides a clear baseline for future A/B tests.

The A/A test protects future trials through this approach. It’s akin to tuning an instrument before a concert; the melody only resonates if the basics are pitch-perfect. In essence, the A/A test isn’t about discovering the new; it’s about ensuring that when the new is tested, it is tested on a sound, reliable stage.

The Purpose of A/A Testing

In the intricate dance of data-driven decision-making, the purpose of an A/A test often plays a subtle yet significant role. This testing methodology, seemingly counterintuitive at first glance, involves comparing two identical segments or scenarios – essentially, testing the same thing against itself. But beneath this mirrored surface lies a strategic depth.

The A/A test is a foundational check, ensuring the analytical framework is free from bias and error. It’s like setting the stage before the main act, guaranteeing that the performance metrics are tuned perfectly for the show – the more complex A/B tests.

Key Purposes of an A/A Test:

- Baseline Establishment: It creates a reliable baseline against which changes can be measured.

- Error Detection: Identifies anomalies in data collection, ensuring accuracy in results.

- System Calibration: Adjusts and fine-tunes the testing system for optimal performance.

- Validity Confirmation: Validates the testing process, confirming that external variables do not influence outcomes.

Organizations can confidently stride forward by conducting an A/A test, knowing their testing environment is a well-oiled machine primed for accurate and insightful experimentation. The A/A test silently protects data-driven initiatives in digital marketing and healthcare. It proves that staring in the mirror can be the most revealing activity, ensuring precision and honesty.

Differences Between A/A and A/B Testing

Navigating the labyrinth of data analysis, one often encounters the crossroads of A/A and A/B testing: two paths diverging, each with its distinct purpose and methodology. The A/A test, generally considered the quieter sibling, compares two identical scenarios to verify test correctness and repeatability. It’s like a dress rehearsal before the grand performance, ensuring that the stage (the test framework) is set flawlessly.

Conversely, the A/B test steps into the spotlight, pitting two versions against each other. This is where the action unfolds, revealing which variant performs better based on specific metrics. It’s the main act, the culmination of hypotheses and creativity, testing whether a new feature or approach outshines the existing one.

Key Differences:

- Purpose: A/A tests calibrate and validate the testing environment; A/B tests compare different versions to find the superior one.

- Methodology: A/A tests mirror scenarios to check for anomalies; A/B tests involve distinct variations.

- Outcome Use: Results from A/A tests fine-tune the testing process; A/B test results inform strategic decisions and innovations.

These two tests play pivotal roles in fields as diverse as web design, marketing, and product development. While the A/A test ensures that the testing grounds are solid and unbiased, the A/B test leaps forward, testing the waters of change and innovation. Each serves a unique purpose, and together, they form a cohesive strategy for data-driven decision-making, turning insights into impactful actions.

Designing an A/A Test

Crafting an A/A test is akin to setting up a controlled experiment in a laboratory, where precision and attention to detail are paramount. A/A tests are simple yet subtle: create two identical situations and see whether the test environment itself affects the results.

Here’s a step-by-step approach to designing an effective A/A test:

- Define Objectives: Clearly understand why you’re conducting the A/A test. It’s about ensuring the reliability of your test infrastructure rather than testing a hypothesis.

- Select Metrics: Choose the metrics to compare the two groups. These range from click-through rates to user engagement levels, depending on the context.

- Create Identical Groups: Split your audience at random so the two test groups are as similar as possible. This includes demographics, behaviors, and any other relevant characteristics.

- Implement the Test: Launch the A/A test across your chosen platforms, ensuring that everything from the timing to the audience is consistent.

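- Monitor and Analyze: Monitor the test results, looking for significant differences between the two groups. Small gaps are expected from random noise; a statistically significant disparity, especially one that persists or recurs, indicates a potential issue in your testing setup.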

- Adjust and Iterate: Use the insights from the A/A test to refine your testing process, making it more robust for future experiments.
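The "Create Identical Groups" and "Implement the Test" steps above can be sketched in code. This is a minimal illustration, assuming users are split by hashing a user ID; the function name `assign_group`, the experiment label, and the group names `A1` and `A2` are hypothetical and not taken from any specific testing tool.

```python
import hashlib
from collections import Counter

def assign_group(user_id: str, experiment: str = "aa-test-1") -> str:
    """Deterministically place a user in one of two identical groups (50/50)."""
    # Hash the experiment label together with the user ID so the same user
    # always lands in the same group for this experiment.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # bucket in the range 0..99
    return "A1" if bucket < 50 else "A2"

# Assign 10,000 hypothetical users and count how many land in each group.
counts = Counter(assign_group(f"user-{i}") for i in range(10_000))
print(counts)
```

Because the assignment depends only on the ID and the experiment label, a returning visitor keeps seeing the same group, and the split should come out close to even.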

In industries like e-commerce, digital marketing, and software development, the A/A test plays a crucial role. It serves as a sanity check, ensuring that when you conduct an A/B test, the results you’re measuring are due to the changes you’ve implemented and not a quirk of the test system. An A/A test is about building a strong foundation, so that each later test stands on its own merits.

Key Metrics in A/A Testing

In A/A testing, the metrics wield the power to either validate the sanctity of your test environment or raise red flags. It’s a bit like being a detective in a world of numbers – you’re looking for clues that tell you whether your testing ground is as neutral as Switzerland or if there’s an unseen bias skewing your results.

While seemingly straightforward, the key metrics in A/A testing are the linchpins in ensuring your A/B tests will later stand on solid ground. Here’s a rundown of the critical metrics to keep under your lens:

- Conversion Rates: This is your go-to metric, revealing if there’s any significant variance in user actions across the two groups.

- User Engagement: Measures like time on site or page views per session can signal if there are underlying discrepancies in the test setup.

- Bounce Rates: High variance in bounce rates between groups could indicate issues in user experience consistency.

- Click-Through Rates (CTR): Essential for gauging whether navigation and user interaction are consistent across both test groups.

- Traffic Sources: Ensuring both groups receive traffic from similar sources is crucial for a fair comparison.
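As a rough illustration of how the metrics above could be compared side by side, the sketch below computes conversion rate, bounce rate, and CTR for each group. The counts are invented for the example, the `GroupStats` structure is hypothetical, and bounce rate is assumed here to mean single-page sessions divided by all sessions; your analytics tool may define it differently.

```python
from dataclasses import dataclass

@dataclass
class GroupStats:
    sessions: int
    single_page_sessions: int
    impressions: int
    clicks: int
    conversions: int

def summarize(stats: GroupStats) -> dict:
    """Turn raw counts into the rates usually compared in an A/A test."""
    return {
        "conversion_rate": stats.conversions / stats.sessions,
        "bounce_rate": stats.single_page_sessions / stats.sessions,
        "ctr": stats.clicks / stats.impressions,
    }

# Hypothetical counts for two groups that saw the same page.
group_a1 = summarize(GroupStats(10_000, 4_200, 25_000, 1_250, 300))
group_a2 = summarize(GroupStats(10_000, 4_150, 25_000, 1_300, 310))

for metric in group_a1:
    a1, a2 = group_a1[metric], group_a2[metric]
    relative_gap = (a2 - a1) / a1
    print(f"{metric}: A1={a1:.4f}  A2={a2:.4f}  relative gap={relative_gap:+.1%}")
```

Small relative gaps like these are expected from noise alone; whether a gap is large enough to worry about is a question for a significance test, not for the raw rates.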

In sectors from e-commerce to digital marketing, these metrics are not just numbers – they’re the compasses that guide you through the murky waters of data interpretation. By meticulously analyzing these metrics, an A/A test ensures that when you’re ready to embark on the journey of A/B testing, your ship is not only seaworthy but poised to navigate the tides of data with precision. This meticulous approach in A/A testing separates a well-oiled, data-driven strategy from one shooting in the dark, hoping to hit the target.

Statistical Significance in A/A Testing

In the universe of A/A testing, statistical significance is the North Star, guiding analysts through the nebulous sky of data interpretation. This concept, while often associated with A/B testing, also plays a crucial role in A/A tests, serving as a litmus test for the soundness of the testing environment.

At its heart, statistical significance in an A/A test answers a fundamental question: Are any observed differences between the two identical groups just random noise, or are they indicative of a flaw in the testing setup? Here’s how it breaks down:

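- P-Value Assessment: In A/A testing, the p-value should usually sit above your significance threshold, indicating no detectable difference between groups. At a 0.05 threshold, roughly 1 in 20 A/A comparisons will still come out significant purely by chance, so a single low p-value is not proof of a flaw, while a recurring pattern of them is a warning sign.

- Confidence Interval: The confidence interval for the difference between the two groups should include zero and be narrow enough to be informative, indicating consistency in the test environment.

- Sample Size: Sample sizes must be large enough to keep random error small, ideally comparable to the sizes you plan to use in later A/B tests.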

- Consistency Across Metrics: Statistical significance should be evaluated across various metrics to ensure comprehensive reliability.
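To make the p-value check concrete, here is a minimal sketch of a two-proportion z-test on conversion counts, written with the Python standard library only. The function name and the counts are assumptions made for illustration; the testing tools discussed in this article may use different statistical methods.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups share one rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical A/A result: 300 vs. 310 conversions out of 10,000 users each.
z, p = two_proportion_z_test(300, 10_000, 310, 10_000)
print(f"z = {z:.3f}, p-value = {p:.3f}")
```

With these example counts the p-value is far above 0.05, which is the outcome you would hope to see most of the time when both groups receive the same experience.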

In fields like digital marketing, e-commerce, and UX design, understanding statistical significance in A/A tests is pivotal. It prevents unbalanced testing setups from misguiding A/B tests. It’s like ensuring the scales are balanced before weighing something precious – you need to trust the tools before you can trust the results.

In essence, statistical significance in A/A testing isn’t just a number or a threshold; it’s a beacon of reliability, shining light on the integrity of your testing process. It’s what separates a hunch from a sound, data-driven strategy, ensuring that every decision is rooted in accuracy, not chance.

Common Pitfalls in A/A Testing

Even the most meticulously designed A/A test is not immune to pitfalls. These hidden traps can skew results, leading even the most seasoned analysts astray. Recognizing these typical mistakes matters, because one false move can compromise your test.

Key Pitfalls in A/A Testing:

- Inconsistent Test Groups: Even minor systematic differences in demographics or behavior between groups can introduce bias, skewing results. Random assignment is what keeps the two groups comparable.

- Fluctuating Sample Sizes: Inconsistent sample sizes can lead to significant variances in results. Ensuring that both groups have a comparable number of participants is essential, and a split that drifts away from the intended ratio (for example, 50/50) often points to a problem in how users are assigned or tracked.

- Timing Discrepancies: Conducting the A/A test during different time frames can introduce external variables, such as seasonal changes or market trends, affecting the test’s validity.

- Overlooking External Factors: Failing to account for external influences, like marketing campaigns or platform updates, can result in misleading conclusions.
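The sample size pitfall above can be checked numerically. A common approach, often called a sample ratio mismatch check, is a chi-square goodness-of-fit test on the group sizes. The sketch below assumes an intended 50/50 split; the function name and the counts are hypothetical.

```python
import math

def sample_ratio_check(n_a: int, n_b: int, expected_share_a: float = 0.5):
    """Chi-square test (1 degree of freedom) of observed vs. intended split."""
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (n_a - expected_a) ** 2 / expected_a + (n_b - expected_b) ** 2 / expected_b
    # For 1 degree of freedom, P(X > chi2) equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value

# A split that is plausibly random noise.
chi2_ok, p_ok = sample_ratio_check(5_050, 4_950)
print(f"5050 vs 4950: chi2 = {chi2_ok:.2f}, p = {p_ok:.4f}")

# A split that is very unlikely under a true 50/50 assignment.
chi2_bad, p_bad = sample_ratio_check(5_300, 4_700)
print(f"5300 vs 4700: chi2 = {chi2_bad:.2f}, p = {p_bad:.2e}")
```

A very small p-value here suggests the assignment or tracking is uneven, which is worth investigating before trusting any metric comparison.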

In industries ranging from digital marketing to user experience design, these pitfalls can render an A/A test ineffective, misleading teams into making decisions based on flawed data. Like a captain steering through foggy seas, an analyst must navigate these pitfalls cautiously, ensuring their A/A test is as robust and reliable as possible. The goal is not just to conduct a test but to glean accurate insights that pave the way for successful A/B testing and, ultimately, informed decision-making.

Analyzing A/A Test Results

Analyzing A/A test results is akin to being a data detective – it’s all about uncovering the story hidden in the numbers. This phase is crucial; it’s where you confirm that your testing environment is a blank canvas, unbiased and ready for the true experiment: the A/B test.

Key Steps in Analyzing A/A Test Results:

- Compare Key Metrics: Look for discrepancies between the two groups’ conversion rates, click-through rates, or any other primary metrics. Remember, in an A/A test, these should be nearly identical, differing only by random noise.

- Assess Statistical Significance: Use statistical tools to determine if any differences are just due to chance or if they point to a problem in the test setup.

- Evaluate Consistency Over Time: Analyze how these metrics perform throughout the test. Consistent results over time indicate a stable test environment.

- Identify Anomalies: Any irregularities, like sudden spikes or drops in the data, could suggest external factors affecting the test.
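One way to sketch the comparison and consistency-over-time steps above is to compute a confidence interval for the difference in conversion rates, both overall and per day. This example uses a simple normal-approximation interval at roughly 95% confidence; the daily counts and all names are invented for illustration.

```python
import math

def diff_confidence_interval(conv_a, n_a, conv_b, n_b, z=1.96):
    """Approximate 95% confidence interval for rate_a minus rate_b."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    diff = p_a - p_b
    return diff - z * se, diff + z * se

# Hypothetical daily counts: (day, conversions A1, users A1, conversions A2, users A2).
daily = [
    ("day 1", 100, 3_300, 105, 3_350),
    ("day 2", 95, 3_400, 100, 3_300),
    ("day 3", 105, 3_300, 105, 3_350),
]

# Flag any day whose interval does not contain zero.
flagged = []
for day, ca, na, cb, nb in daily:
    low, high = diff_confidence_interval(ca, na, cb, nb)
    print(f"{day}: [{low:+.4f}, {high:+.4f}]")
    if not (low <= 0 <= high):
        flagged.append(day)

# Overall interval across the whole test period.
overall = diff_confidence_interval(
    sum(d[1] for d in daily), sum(d[2] for d in daily),
    sum(d[3] for d in daily), sum(d[4] for d in daily),
)
print(f"overall: [{overall[0]:+.4f}, {overall[1]:+.4f}], flagged days: {flagged}")
```

Keep in mind that checking many days separately raises the odds that at least one is flagged by chance, so an isolated flag is a prompt to look closer rather than a verdict.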

In sectors like e-commerce, digital marketing, and user interface design, the insights gleaned from an A/A test are invaluable. They lay the groundwork for future tests, ensuring that you build on firm, reliable ground when you move on to A/B testing.

Analyzing A/A test results isn’t just a step in the testing process; it’s a safeguard. It helps confirm a controlled, neutral setting for testing new ideas. It’s the assurance that your insights reflect user behavior and preferences, not a flawed testing setup. It’s the foundation upon which reliable, data-driven strategies are built.

Quality Assurance Through A/A Testing

In data-driven decision-making, A/A testing stands as a sentinel of quality assurance. This unsung hero works quietly behind the scenes, ensuring that the stage for A/B testing is set with unwavering precision and reliability.

At its core, A/A testing serves as a robust check against false positives, a common pitfall in the dynamic world of data analysis. By comparing two identical scenarios, any significant differences in outcomes point to potential flaws in the testing process. This strategy protects future A/B tests.
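To illustrate what a check against false positives looks like, the following simulation runs many A/A tests in which both groups share the same true conversion rate, then counts how often a two-proportion z-test reports a significant difference at the 0.05 level. All parameters (run count, group size, conversion rate, seed) are arbitrary assumptions chosen for the sketch.

```python
import math
import random

def p_value_two_proportions(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for a difference in conversion rates (z-test)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all conversions) in both groups
    z = (conv_a / n_a - conv_b / n_b) / se
    return math.erfc(abs(z) / math.sqrt(2))

def simulate_aa_tests(runs=1000, users_per_group=1000, true_rate=0.10,
                      alpha=0.05, seed=42):
    """Return the share of simulated A/A tests that look significant."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        # Both groups draw conversions from the same underlying rate.
        conv_a = sum(rng.random() < true_rate for _ in range(users_per_group))
        conv_b = sum(rng.random() < true_rate for _ in range(users_per_group))
        if p_value_two_proportions(conv_a, users_per_group,
                                   conv_b, users_per_group) < alpha:
            false_positives += 1
    return false_positives / runs

rate = simulate_aa_tests()
print(f"Share of A/A runs flagged as significant: {rate:.1%}")
```

In a healthy setup this share should land near the chosen significance level. If your real A/A tests come out significant much more often than that, the testing process itself deserves scrutiny.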

The role of A/A testing in quality assurance includes:

- Baseline Accuracy: It establishes an accurate baseline for comparison in future tests.

- Detection of Flaws: Identifies issues in the testing setup, including software glitches or sampling errors.

- Validation of Data Collection Methods: Ensures that the methods used to gather and analyze data are sound and reliable.

- Refinement of Testing Processes: Offers insights for refining and enhancing testing protocols and strategies.

In industries where precision is paramount – like pharmaceuticals, digital marketing, and software development – the A/A test is a critical tool. It’s akin to calibrating a sophisticated instrument; without this step, the readings you rely on could lead you astray.

In summary, A/A testing is much more than a mere formality. It’s an essential process that upholds the standards of accuracy and reliability in any data-driven environment. By meticulously validating the framework, A/A testing lays the groundwork for meaningful insights and informed decisions, ensuring that each step forward is taken on solid ground.

A/A Testing and User Experience

In user experience (UX), A/A testing emerges as an unsung hero, a behind-the-scenes force setting the stage for optimal user interactions. It’s a foundational step, often overlooked yet vital, in crafting an environment where every user action delivers insights untainted by underlying system biases.

The intersection of A/A testing and UX can be highlighted as follows:

- Ensuring Accurate User Behavior Tracking: A/A testing verifies that the tools used to monitor user behavior function correctly, providing a true representation of user interactions.

- Baseline for User Experience Metrics: It establishes a reliable baseline, crucial for accurately assessing changes in UX during subsequent A/B tests.

- Detecting Anomalies in User Interaction Data: Any significant variance detected in an A/A test points to potential flaws in how user data is collected or analyzed.

- Refining User Experience Research Methods: Insights from A/A testing help refine research methodologies, ensuring that future UX studies are based on solid, unbiased data.

In industries where UX is paramount, from digital platforms to mobile app development, the role of A/A testing is critical. It is a guardian of data integrity, ensuring that changes made to enhance user experience are based on accurate, reliable user interaction data.

A/A testing, in this context, is not just a preliminary step; it’s a fundamental part of a larger strategy aimed at understanding and enhancing how users interact with products and services. It ensures that decisions to improve UX are grounded in reality, not skewed data, setting the stage for genuine, impactful user experience enhancements.

Tools and Software for A/A Testing

In the meticulous world of A/A testing, the right tools and software are akin to a craftsman’s cherished toolkit – essential for precision and efficiency. These digital instruments streamline the A/A test process and enhance accuracy, ensuring that the insights gleaned are as clear as a bell.

Leading Tools and Software for A/A Testing:

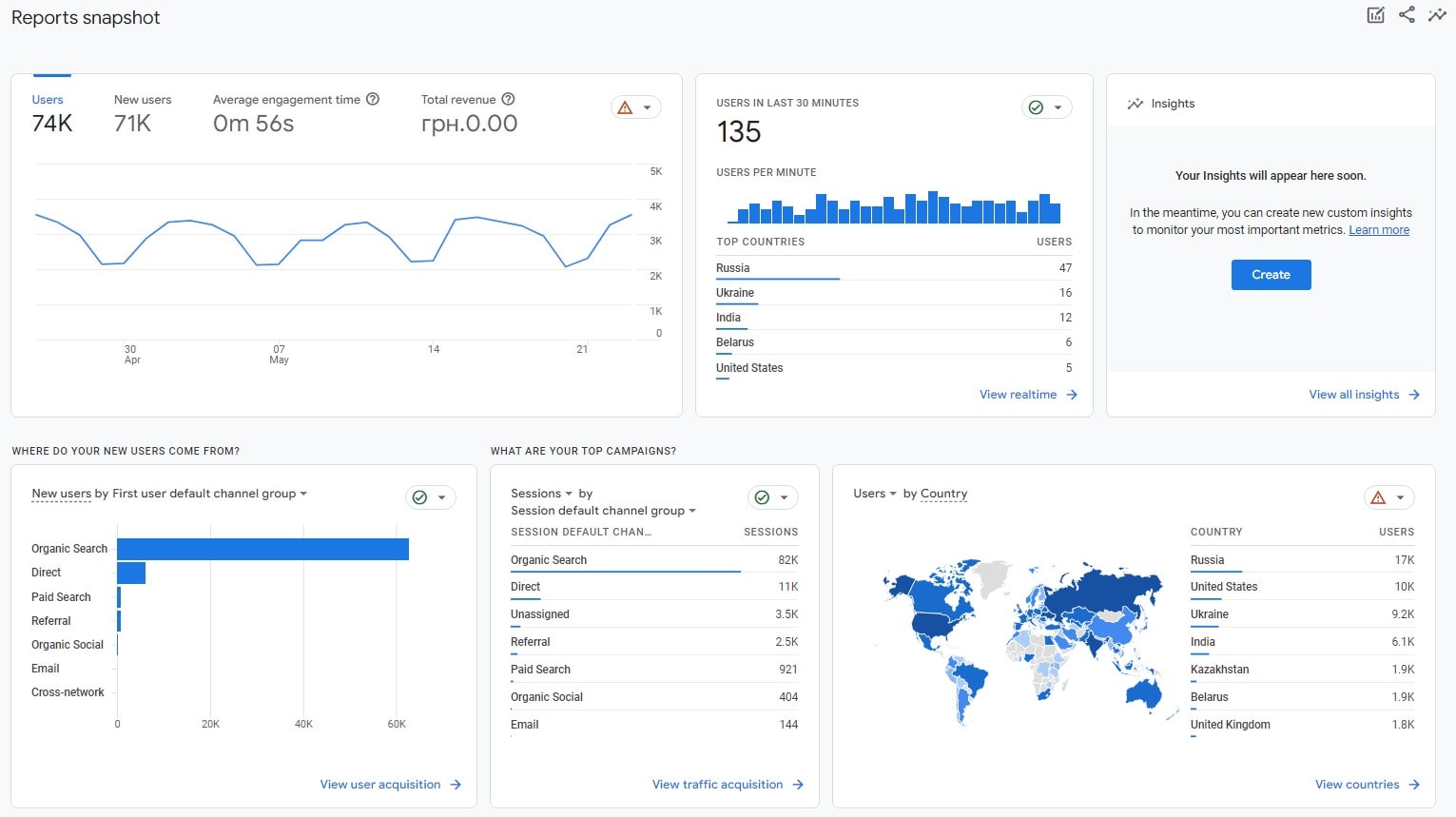

- Google Analytics: A staple in digital analysis, it offers robust features for tracking user interactions and comparing metrics across different test groups.

- Optimizely: Renowned for its user-friendly interface, Optimizely allows seamless setup of A/A tests, making it a favorite among marketers and UX designers.

- Adobe Target: Offers sophisticated testing capabilities, including A/A tests, and is particularly well-suited for enterprises seeking deep integrations with other Adobe products.

- VWO (Visual Website Optimizer): Known for its intuitive design, VWO provides a comprehensive suite of features for A/A and A/B testing, including detailed segmentation and analysis.

These tools, each with unique strengths, cater to various industries, from e-commerce to software development. They demystify the process of A/A testing, transforming it from a daunting task into a manageable, even enjoyable, part of the data analysis journey.

In essence, the project’s needs and goals determine which A/A testing tool or software fits best. Whether it’s the simplicity and wide reach of Google Analytics or the detailed, enterprise-level capabilities of Adobe Target, these tools are the unsung heroes in the background, ensuring that every A/A test is not just a step but a stride towards accurate, data-driven decision-making.

Conclusion

As we wrap up our exploration of A/A tests, it’s clear that they are more than just a preliminary step in optimization strategies: they are a linchpin in ensuring that your marketing efforts hit the mark. Whether running an eCommerce store, managing a healthcare platform, or nurturing an educational app, A/A tests provide a critical foundation for accurate data interpretation and decision-making.

Key takeaways from A/A tests include:

- Unmatched Baseline Accuracy: Establishes a solid foundation for further tests.

- Tool and Software Reliability: Validates the functionality of your chosen tools and software.

- Enhanced User Insight: Offers deep dives into user behavior and preferences.

Remember that an A/A test is meant to make your testing ground as steady as an island amid calm waters, not to find wild variances. When you run an A/A test, you’re not just checking boxes – you’re setting the stage for every subsequent test to be more meaningful and impactful.

As we conclude, consider Plerdy’s robust suite of tools for CRO & UX. Their platform, adept at capturing and analyzing intricate user interactions, can turn the insights from your A/A tests into actionable strategies. Whether you want to refine your targeting, understand visitor cycles, or optimize your sales funnel, integrating A/A tests with Plerdy’s analytics can elevate your business. Let’s not just run tests – let’s run them smartly and transform data into growth.